Introduction:

DirectX 9.0 has been with us since December 2002 and we have seen some good progress made since its inception. Possibly the biggest evolution came when Shader Model 3.0 support arrived on NVIDIA’s GeForce 6-series hardware in Spring 2004, with Microsoft following up with the DirectX 9.0c libraries later that year.

Each new instance of DirectX has been an evolution of the previous version, bringing support for new hardware features and allowing game developers to push the boundaries of realism that little bit further. However, a number of limitations have gotten in the way of developers ever since the API’s invention. With a completely new driver model arriving in Windows Vista, Microsoft’s Direct3D development team decided that the best way forward was to wipe the slate clean and start from scratch.

DirectX 10 is probably the most important revolution in games development since the introduction of the programmable shader in DirectX 8.0. Because of the way Microsoft has designed the new driver model, DirectX 10 will only be available to Windows Vista users; there will not be a version released for Windows XP. Alongside DirectX 10, Windows Vista will ship with DirectX 9.0Ex, because pre-DirectX 10 hardware will not work under the new API following its complete overhaul.

NVIDIA’s DirectX 10-compliant GeForce 8800 series has been the talk of the town since its launch on November 8th, and while the chip performs incredibly well in DirectX 9.0 games, its DirectX 10 performance is still a rather large unknown. We’re not going to be able to answer that question today, but over the course of this article we will cover the key decisions Microsoft made during the development of its new API and then explore what DirectX 10 is going to mean for gamers.

Windows Display Driver Model:

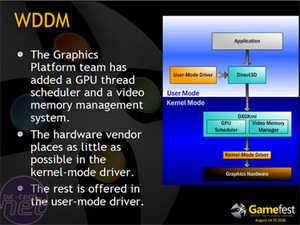

DirectX 10 is a major inflection point for Windows graphics, so Microsoft wanted to make sure that it laid solid foundations – this is where its new driver model comes into play. Although it’s not directly part of DirectX 10, it’s a backgrounder that’s worth covering. Microsoft claims that the Windows Display Driver Model (WDDM) offers “unprecedented stability and performance”. It is a new way of designing drivers, meaning an end to ForceWare and Catalyst Control Center as we know them. Whilst the applications might look similar on the surface, the back end of display drivers will now be significantly different.

In order to achieve this stability and performance, Microsoft’s graphics platform team split the driver into two key components: the User Mode Driver (UMD) and the Kernel Mode Driver (KMD). Instead of having the whole display driver sit in the privileged kernel portion of the operating system, Microsoft has moved as much of the code as possible out into the user-mode portion of the driver. Code running in user mode can fail without taking the operating system down with it, and the split also allows many more features to be implemented directly into the pipeline.
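
To make the split more concrete, here is a toy model in Python. The class names, the batch size and the idea of counting submissions are illustrative assumptions rather than Microsoft’s actual driver interfaces: the hypothetical user-mode part does the per-call work inside the application’s own process and only hands finished command buffers to the kernel-mode part.

```python
from dataclasses import dataclass, field


@dataclass
class KernelModeDriver:
    """Hypothetical kernel-mode side: receives finished command buffers
    and queues them for the GPU. It does no per-draw-call work."""
    submissions: list = field(default_factory=list)

    def submit(self, command_buffer):
        # Each call models one user-to-kernel transition.
        self.submissions.append(list(command_buffer))


@dataclass
class UserModeDriver:
    """Hypothetical user-mode side: runs inside the application's process,
    translates API calls into commands and batches them."""
    kmd: KernelModeDriver
    batch_size: int = 4
    pending: list = field(default_factory=list)

    def draw(self, mesh_id):
        self.pending.append(("draw", mesh_id))
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        # Hand the whole batch to kernel mode in a single submission.
        if self.pending:
            self.kmd.submit(self.pending)
            self.pending.clear()


kmd = KernelModeDriver()
umd = UserModeDriver(kmd)
for mesh in range(10):
    umd.draw(mesh)
umd.flush()
print([len(buf) for buf in kmd.submissions])  # [4, 4, 2]
```

Ten draw calls reach the kernel-mode side as only three submissions, and a bug in the batching code would live in the application’s process rather than in the kernel.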

With WDDM, Microsoft has also completely virtualised the graphics hardware by introducing GPU threading and video memory virtualisation. GPU threading, or scheduling, is exactly what it sounds like: multiple processes can share the GPU’s capabilities at the same time, with the operating system deciding which of them gets to use it at any given moment.
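
The time-sharing idea can be sketched as a simple round-robin scheduler, assuming a fixed time slice (quantum) and work measured in arbitrary units. This is not the algorithm Vista’s GPU scheduler uses; the function and process names are hypothetical and only illustrate how several processes can take turns on one GPU.

```python
from collections import deque


def schedule(processes, quantum):
    """Round-robin time-slicing of one simulated GPU.

    processes: dict mapping a process name to the units of GPU work it needs.
    quantum:   the most units a process may run before it must yield.
    Returns the timeline as a list of (process, units_run) tuples.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(processes.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        remaining -= ran
        if remaining > 0:
            # Unfinished work goes to the back so other processes get a turn.
            queue.append((name, remaining))
    return timeline


print(schedule({"desktop": 2, "game": 5, "gpgpu": 3}, quantum=2))
# [('desktop', 2), ('game', 2), ('gpgpu', 2), ('game', 2), ('gpgpu', 1), ('game', 1)]
```

No single process can monopolise the GPU: the game’s five units of work are interleaved with the desktop and the GPGPU job instead of running to completion first.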

In Windows XP, the driver model assumes that a single 3D application owns the GPU, so running more than one can lead to occasional problems such as a lost-device (D3DERR_DEVICELOST) scenario during a display mode change – this is essentially where a 3D application would freeze if you switched to another 3D application. With Windows Vista, the desktop itself is a 3D environment, meaning that without these threading and scheduling advancements you’d end up with application crashes much more often.
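
Under Direct3D 9, applications are expected to handle a lost device themselves. The sketch below simulates the usual recovery loop: while the device reports D3DERR_DEVICELOST the application skips rendering and polls, and once it reports D3DERR_DEVICENOTRESET the application calls Reset and carries on. The Python classes and method names are hypothetical stand-ins for IDirect3DDevice9::TestCooperativeLevel and IDirect3DDevice9::Reset, not real bindings.

```python
from enum import Enum, auto


class CoopLevel(Enum):
    OK = auto()
    DEVICE_LOST = auto()       # stands in for D3DERR_DEVICELOST
    DEVICE_NOT_RESET = auto()  # stands in for D3DERR_DEVICENOTRESET


class SimulatedDevice:
    """Hypothetical stand-in for a Direct3D 9 device; not a real binding."""

    def __init__(self, scripted_states):
        # Results returned by successive cooperative-level checks.
        self._states = list(scripted_states)
        self.reset_count = 0

    def test_cooperative_level(self):
        return self._states.pop(0) if self._states else CoopLevel.OK

    def reset(self):
        self.reset_count += 1


def render_frame(device, log):
    state = device.test_cooperative_level()
    if state is CoopLevel.DEVICE_LOST:
        # Another application owns the display: skip this frame, poll again later.
        log.append("skip")
        return False
    if state is CoopLevel.DEVICE_NOT_RESET:
        # The device can be restored; in real code, D3DPOOL_DEFAULT resources
        # are released before Reset and recreated afterwards.
        device.reset()
        log.append("reset")
    log.append("draw")
    return True


device = SimulatedDevice([CoopLevel.OK, CoopLevel.DEVICE_LOST, CoopLevel.DEVICE_LOST,
                          CoopLevel.DEVICE_NOT_RESET, CoopLevel.OK])
log = []
for _ in range(5):
    render_frame(device, log)
print(log)  # ['draw', 'skip', 'skip', 'reset', 'draw', 'draw']
```

Every Direct3D 9 game has to carry some version of this bookkeeping, which is part of why a desktop full of 3D applications needs the OS to arbitrate access instead.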

This, together with the fact that Vista runs many 3D applications at any one time, highlighted the need for WDDM. It is especially true given the emergence of General Purpose GPU (GPGPU) applications that make use of the GPU’s massive parallelism. Both ATI (now a division of AMD) and NVIDIA are working towards using the GPU for more than just 3D graphics. In fact, we’ve already seen AMD’s Stream Computing Initiative accelerate the Folding@Home client, while NVIDIA has announced its CUDA technology for GeForce 8-series video cards. Both companies have also talked about GPU physics acceleration via Havok FX.

Aside from GPU threading, WDDM can improve performance by using system memory as video memory through virtualisation. This effectively gives the display driver access to ‘infinite’ memory, because it can page data out of high-speed video memory into system memory as and when a 3D application requires more.
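
A toy model of this paging idea follows, assuming a least-recently-used (LRU) eviction policy and sizes in arbitrary units (say, megabytes). The class and method names are hypothetical and the policy was chosen for illustration; it is not a description of how the WDDM video memory manager decides what to evict.

```python
from collections import OrderedDict


class VideoMemoryManager:
    """Toy video memory virtualisation with LRU eviction (hypothetical).

    Resources live in a fixed-size 'VRAM'. When a new or paged-out resource
    does not fit, the least recently used ones are moved to 'system memory'
    instead of the allocation failing.
    """

    def __init__(self, vram_capacity):
        self.vram_capacity = vram_capacity
        self.vram = OrderedDict()  # name -> size, oldest use first
        self.system = {}           # name -> size, paged-out resources

    def used(self):
        return sum(self.vram.values())

    def use(self, name, size=None):
        """Make a resource resident in VRAM, allocating it if it is new."""
        if name in self.vram:
            self.vram.move_to_end(name)  # mark as most recently used
            return
        if name in self.system:
            size = self.system.pop(name)  # page back in from system memory
        if size is None or size > self.vram_capacity:
            raise ValueError(f"cannot make {name!r} resident")
        while self.used() + size > self.vram_capacity:
            victim, victim_size = self.vram.popitem(last=False)
            self.system[victim] = victim_size  # page out rather than fail
        self.vram[name] = size


mgr = VideoMemoryManager(vram_capacity=256)
for name, size in [("textures", 128), ("meshes", 96), ("shadow_maps", 64)]:
    mgr.use(name, size)
mgr.use("textures")  # paged back in, evicting the meshes
print(list(mgr.vram), mgr.system)  # ['shadow_maps', 'textures'] {'meshes': 96}
```

The application asks for 288 units against 256 of VRAM and never sees an out-of-memory error; the cost is the copy to and from system memory, which is why the memory only feels ‘infinite’.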

Finally, Microsoft has worked on crash recovery – WDDM is much better at recovering from driver and/or hardware failures. If the display driver crashes, WDDM will just kill the process responsible for the crash, rather than causing the obligatory blue screen of death or operating system hang.
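
The recovery behaviour described above can be modelled with a simple watchdog. This sketch assumes a failure is detected by a GPU task running longer than a timeout; the function name, the timeout value and the process names are all hypothetical, and the code illustrates the idea of isolating the culprit rather than how Windows actually detects driver failures.

```python
def recover(gpu_time, timeout):
    """Toy model of driver crash recovery (hypothetical).

    gpu_time: dict mapping process name -> seconds its current GPU task has run.
    timeout:  assumed limit after which a task is treated as hung.
    Returns (terminated, survivors). Only the hung processes are terminated;
    survivors keep running with their task timers cleared, as if the driver
    had been restarted underneath them, and the system as a whole never halts.
    """
    terminated = sorted(name for name, t in gpu_time.items() if t > timeout)
    survivors = {name: 0.0 for name in gpu_time if name not in terminated}
    return terminated, survivors


print(recover({"desktop": 0.01, "game": 5.0, "browser": 0.2}, timeout=2.0))
# (['game'], {'desktop': 0.0, 'browser': 0.0})
```

Contrast this with the XP behaviour the paragraph describes, where the equivalent failure in kernel-mode driver code would take the whole machine down.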
