Ever since AMD confirmed that it had bought ATI, there has been talk about what place would be left for Nvidia in the industry. And with Intel announcing its intention to enter the discrete graphics card market with its Larrabee project at this month's IDF in Shanghai – merely confirming our thoughts from IDF San Francisco in September last year – it was clear that Nvidia was being put under pressure from all sides.

There has been propaganda coming from both AMD and Intel about the demise of Nvidia, and some of the statements made by high-level Intel executives at IDF just a few weeks ago prompted a reaction from Nvidia at its Financial Analyst Day on Thursday last week. The response came from none other than Nvidia's CEO and President, Jen-Hsun Huang – a man with an incredible passion for the business he created.

He used the Financial Analyst Day as a platform to address some of the statements made about his company that he claimed were false. He spent nearly two hours of the morning session ranting about Intel's false claims and propaganda, and then more time in the four-hour afternoon session talking about how Nvidia will out-innovate its competitors to reinvent the future.

As a result of this, we felt the nature of this particular Nvidia Analyst Day deserved much more than just a short blurb in the news section – the comments were strong and the gloves came right off during Huang's presentation of what he believes are the real facts about the future of the industry as it enters the visual computing era. So, without further ado, let’s have a look at what was said and why.

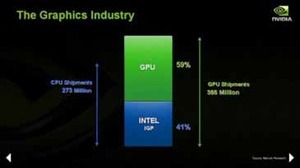

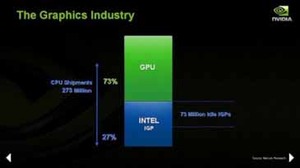

Getting straight to the point

The cold war between the two companies came to a head during Intel executive Pat Gelsinger's opening keynote in Shanghai, China, where he boldly claimed: "First, graphics that we have all come to know and love today. I have news for you. It's coming to an end. Our multi-decade old 3D graphics architecture that's based on a rasterisation approach is no longer scalable and suitable for the demands of the future." Gelsinger believes that the industry needs a programmable, ubiquitous and unified architecture... like Larrabee. This is of course convenient, but he seems to forget that we've had very programmable and unified architectures ever since the launch of the GeForce 8800 GTX in November 2006.

DirectX 10 was an inflection point for graphics cards, because it was the first time that shader units became fully generalised processing 'cores', although it's worth remembering that they're not quite as flexible as a CPU core in their current design.

"Here's somebody who's new to our industry and he's basically telling us that 3D graphics as we know it is dead," said Huang. "This is the most inspirational quote I can imagine giving to our employees. Nothing fires us up more than that. I'm sure that's what he intended – getting the most intensively competitive company in technology fired up. We're pretty fired up anyway, as we're pretty passionate about what we do. The fact that the statement is just plain wrong means it's pointless to argue about it."

It could be said that even Andrew Chien, Intel's Director of Research, agrees to an extent with Huang's assessment of Gelsinger’s sensational statement, although to say that he agrees completely would be a bit of a stretch. Chien believes that ray tracing will not make an immediate transition into graphics engines – instead, it’ll gradually be introduced for certain effects. “We expect [ray tracing] to first penetrate areas where the additional flexibility is of benefit to developers. If the image quality benefits are there, but performance isn’t acceptable, developers aren’t going to use it,” he said during an interview at IDF Shanghai.

Following his complete dismissal of Gelsinger's statement, Huang turned to Intel's graphics market share. Intel believes that the integrated graphics market will continue to grow, which would mean that demand for discrete GPUs will drop, essentially reducing the demand for Nvidia's products. Huang had his own theories about Intel's share of the graphics market, suggesting that customers buying a CPU often bought a chipset with "free" graphics attached to it. Intel's revenue shows that chipsets are a small part of its earnings, but they represent a large portion of its fab space.

With both Intel's and AMD's next-generation processors integrating a graphics core on the CPU, it's easy to understand how the integrated graphics market will grow – whether those graphics cores will be used is another question altogether. Huang told analysts to "remember how market share is calculated." In other words, a unit doesn't have to be used to count in the market share figures – many integrated graphics chipsets go unused because their owners have bought a discrete GPU to satisfy their gaming needs. Moving the graphics core to the CPU means an even greater proportion of them won't be used, according to Huang.
