The Hardware Hall of Fame

In this country, we tend to be modest – and if not, then there’s usually a hint of apology, sarcasm or irony in our triumphalism. If Muhammad Ali had come from Kent instead of Kentucky, can you imagine him saying, "I’m not the greatest; I’m the double greatest. Not only do I knock ’em out, I pick the round"? No; he’d probably say that he was "pretty good" and shrug. There’s a reason why we don’t have cheerleaders during half-time at Yeovil vs Brentford, don’t drive tank-sized SUVs to take us three minutes down the road and can, with a straight face, present the Ashes urn – which is roughly the size of a Mars bar – as the prize for a serious international sporting event. That isn’t always a good thing, though. Being overly modest can result in genius going unappreciated, chances being wasted and the lessons of the past being forgotten. While we aren’t suggesting replacing the Ashes with a hulking great piece of silverware the size of ice hockey’s Stanley Cup, we think there’s something in the American idea of celebrating the best, the brightest and the most brilliant with a Hall of Fame.

The idea is that for a given field, whether it’s ice hockey or rock’n’roll, a panel of experts vote on the absolute best of the best, entering them into the Hall of Fame in recognition of the fact that they’re brilliant examples of how things should be done. Given how many products we see that still make obvious mistakes, we think the idea of lauding those rare few that are paragons of great design and technology has real merit.

We aren’t going to build an actual Hall, as other Halls of Fame do, but we intend to return to our Hall of Fame fairly regularly and ‘vote in’ new candidates. We’ll take votes via the site and use the discussions there to determine which products really deserve the laurels.

So, without further ado, here are the inaugural inductees to our Hall of Fame; and don't forget to check out the shameful entries in the Hut of FAIL, too!

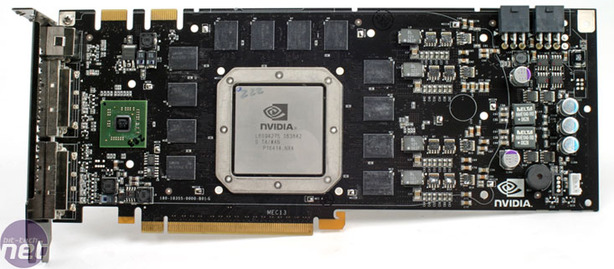

Nvidia GeForce 8800 GTX

Launched: October 2006
Inducted for: Proving that high-end graphics cards can have a decent lifespan

Buying a top-of-the-range graphics card at launch is a pastime for those who are cavalier with their money and sanity, but who demand, beyond any doubt, the fastest performance available. However, it’s a given that something newer, bigger, hotter and faster will arrive, and usually very soon.

All hail the king, G80

Top-of-the-range graphics cards are usually one-hit wonders – but not in the case of the GeForce 8800 GTX. The 8800 GTX was a surprise arrival in late 2006. Given that ATI had already designed the first GPU with a unified shader architecture in 2005 (the Xenos chip inside the Xbox 360), few people expected Nvidia to beat the red team to the punch on the PC, but it did just that. The GeForce 8800 GTX was the first PC GPU with unified shaders: gone were the fixed pixel and vertex pipelines, and in their place came stream processors, flexible ALUs that can be dynamically allocated to whatever work a 3D engine requires, such as geometry, texturing or lighting.

This move allowed the GeForce 8800 GTX to be the first DirectX 10-compatible GPU, but while it was a radical leap forward, the card was still fast in DirectX 9 games. This turned out to be an important factor – even now, three years on, worthwhile DX10 games are few and far between.

The design was good, but Nvidia also got the clock speeds and memory configuration right: a 575MHz GPU with 1.35GHz stream processors talking to 768MB of GDDR3 over a 384-bit-wide interface still sounds good – even the Radeon HD 5870 only has a 256-bit memory interface (although ATI’s newest card has 40 per cent faster memory bandwidth thanks to its quicker GDDR5 memory).
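The bus width matters because theoretical peak memory bandwidth is simply the bus width in bytes multiplied by the memory's effective transfer rate. The short Python sketch below works through that arithmetic. The 1,800MT/s effective rate for the 8800 GTX's GDDR3 (900MHz, double data rate) is our assumption from Nvidia's reference specification rather than a figure quoted above, and the function names are purely illustrative.

```python
def peak_bandwidth_gbs(bus_width_bits: int, effective_rate_mts: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8  # the whole bus moves this many bytes per transfer
    return bytes_per_transfer * effective_rate_mts * 1e6 / 1e9


def required_rate_mts(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Effective transfer rate (MT/s) a bus of this width needs to hit a bandwidth target."""
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gbs * 1e9 / bytes_per_transfer / 1e6


# Assumption: reference 8800 GTX GDDR3 at 900MHz, double data rate = 1,800MT/s effective.
gtx_8800 = peak_bandwidth_gbs(384, 1800)
print(f"GeForce 8800 GTX (384-bit): {gtx_8800:.1f}GB/s")

# A 256-bit bus needs much faster memory just to match that figure.
print(f"256-bit bus needs {required_rate_mts(gtx_8800, 256):.0f}MT/s to match")
```

A 384-bit bus moves 48 bytes per transfer, so it needs only modest GDDR3 speeds to reach a high figure; a 256-bit design has to make up the difference with a much quicker memory type, which is exactly the route ATI took with GDDR5.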

When we last tested the 8800 GTX, just over a year ago, we found that its design had held up remarkably well – Fallout 3 at 1,920 x 1,200 and Far Cry 2 at 1,680 x 1,050 were within reach, and we concluded that, against the GeForce 9-series, GTX 200-series and ATI’s 4800s, there was no point in upgrading.

A year on, the gaming landscape hasn’t changed dramatically, and while it’s likely that some GeForce 8800 GTX owners will have kept up their habit of buying top-of-the-range cards, those who have stuck with the original will have found that, rather than growing old disgracefully and forcing them to forgo high resolutions and decent settings, the 8800 GTX is still extremely capable.
