Does Nvidia have a future?

Over the next few months, we’re going to be looking at the future prospects of some of the key companies in the technology industry. To start the series off, we’re looking at one of the most hotly debated companies in recent times, Nvidia. Ever since its archrival ATI was acquired by AMD in October 2006, some commentators have believed that the writing has been on the wall for Nvidia. But the question is: is the writing really on the wall for the graphics maker, or is the company on the cusp of a period of massive growth?

The biggest question - and one brought sharply into focus by AMD’s buyout of ATI - is Nvidia’s CPU situation. Nvidia doesn’t have an x86 license, which means it is unable to compete with Intel and AMD when it comes to making x86 CPUs. And with CPUs and GPUs seemingly on a collision course, some believe that an x86 license will be an essential part of Nvidia’s long-term survival plan.

AMD and Intel are both placing a renewed focus on platformisation – a term that Nvidia itself has used quite extensively in the past. The x86 instruction set may be a key part of that going forwards, and lacking access to it may leave Nvidia in troubled waters... but on the other hand it may not be a problem. As we’ll see, certain changes in the tech industry have arguably left the traditional alliance of x86 CPUs and Microsoft Windows more vulnerable than at any point in the last ten years.

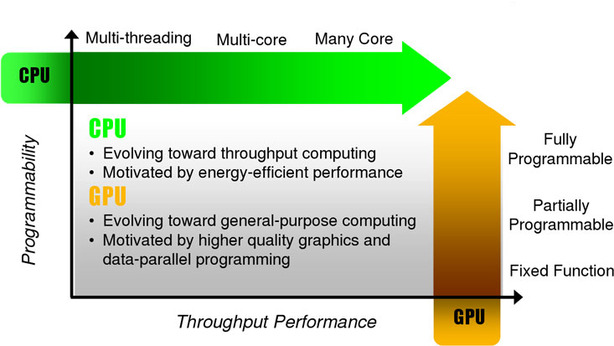

Nvidia finds itself at the intersection of many interesting trends, the most obvious being chip design. Take CPUs: they have always been very programmable, but they hit a brick wall in terms of frequency during the Pentium 4 era, which led both AMD and Intel to decide that the only real way forwards was to parallelise, with many-core chips.

HyperThreading had already existed long before Intel made the decision to increase core counts and there were clear benefits to this technology. After HyperThreading came the first true dual-core chips and quad-cores followed soon after. More recently, we’ve seen hex- and octa-core CPUs, which are currently aimed at server and high-performance computing markets. To take things one step further, Intel’s upcoming Larrabee processor looks, design-wise, like a cross between a CPU and a GPU – its tens of simple (but heavily vectorised) cores will feature x86 extensions so that it can theoretically run code that already exists after a simple recompile with the Larrabee compiler.

Coming from the other direction, the GeForce 8800 GTX was the first major stepping stone towards this convergence thanks to its underlying CUDA-compatible architecture. G80 was effectively a massively parallel vector processor, so developers could not only write graphics code for it but also write applications in a version of C with a few extensions (known as C for CUDA). As a result, developers could theoretically write any kind of application and run it on a GPU, and as long as the code was highly parallel - i.e. suitable to run over the GPU’s many stream processors - good results would follow.
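To give a flavour of what "C with a few extensions" means in practice, here is a minimal sketch of a highly parallel job written for CUDA: computing y = a*x + y across two arrays. It is illustrative only and is not taken from Nvidia's own samples. The names `saxpy_kernel` and `saxpy` are our own, the block size of 256 threads is an arbitrary assumption, and the host-side wrapper uses C++ containers from the current CUDA toolkit for brevity. Error checking is left out to keep it short.

```cuda
#include <cstddef>
#include <vector>
#include <cuda_runtime.h>

// The __global__ keyword is one of the CUDA extensions: it marks a function
// that runs on the GPU. Every GPU thread executes this body once.
__global__ void saxpy_kernel(int n, float a, const float* x, float* y)
{
    // Built-in variables tell each thread where it sits in the launch grid,
    // which gives it a unique element index to work on.
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {                 // guard: the last block may overshoot n
        y[i] = a * x[i] + y[i];  // no dependency on any other element
    }
}

// Hypothetical host-side helper (our own name): copies the data to the GPU,
// launches one thread per element and copies the result back.
// Real code should check the return value of every CUDA call.
std::vector<float> saxpy(float a, const std::vector<float>& x, std::vector<float> y)
{
    const int n = static_cast<int>(x.size());
    if (n == 0 || y.size() != x.size()) {
        return y;                // nothing sensible to compute
    }
    const size_t bytes = n * sizeof(float);

    float* d_x = nullptr;
    float* d_y = nullptr;
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice);

    const int threads = 256;                         // assumed block size
    const int blocks = (n + threads - 1) / threads;  // round up to cover n
    saxpy_kernel<<<blocks, threads>>>(n, a, d_x, d_y);  // <<<...>>> is the launch extension

    // This copy waits for the kernel to finish before reading the result.
    cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_x);
    cudaFree(d_y);
    return y;
}
```

Calling `saxpy(2.0f, x, y)` on two equal-length vectors returns the updated copy of `y`. The point to notice is that the kernel contains no loop: the loop has been replaced by thousands of threads, which is exactly why the approach only pays off when each element can be processed independently.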

The question this raises, of course, is whether x86 matters to developers going forwards or whether it’s merely there for backwards compatibility. Nvidia hopes that it’s the latter because, at least in systems where x86 instructions are needed, there will be an x86 CPU as well as a GPU, or heavily parallel processor.
