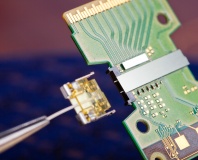

On the morning of Day Zero at IDF, Mario Paniccia, an Intel Fellow and Director of the Photonics Technology Lab, told attendees that Intel has developed a silicon- and germanium-based photo detector that can detect laser light signals at speeds of over 40 Gbits/sec.

The company claims that, at this speed, it is the world’s best-performing silicon-germanium photo detector.

Photo detection, the receiving end of fibre optic communication, is the process of converting optical data into electrical data. In the past, though, fibre optics earned a reputation for being an expensive and specialised way of moving data.

This prompted Intel to start researching silicon photonics, which involves manufacturing fibre optic components from silicon. While silicon is opaque in the visible spectrum of light, it’s transparent at infrared wavelengths, meaning it can guide light. However, that same transparency means silicon cannot absorb the infrared light, which presents a problem when you want to detect it.

To convert the infrared light guides into detectors, Intel’s researchers have added a thin layer of germanium to the device. Germanium was chosen because it absorbs light at the wavelengths Intel is interested in (around 1.3 to 1.6 microns), and because it is a CMOS-compatible material that has previously been used in semiconductor processing.
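To see why germanium absorbs at these wavelengths while silicon does not, it helps to compare the photon energy with each material's band gap. The following is a rough sketch only: the band gap figures are approximate room-temperature textbook values rather than numbers from Intel's presentation, and the function names are made up for illustration.

```python
# Photon energy in eV for a wavelength in microns: E = h*c / wavelength.
HC_EV_UM = 1.23984  # Planck constant times speed of light, in eV*micron

# Approximate room-temperature band gaps in eV.
# Assumption: textbook values, not figures quoted by Intel.
BAND_GAP_EV = {"silicon": 1.12, "germanium": 0.66}


def photon_energy_ev(wavelength_um: float) -> float:
    """Return the energy of a single photon at the given wavelength."""
    return HC_EV_UM / wavelength_um


def can_absorb(material: str, wavelength_um: float) -> bool:
    """A material absorbs a photon only if the photon energy reaches its band gap."""
    return photon_energy_ev(wavelength_um) >= BAND_GAP_EV[material]


if __name__ == "__main__":
    for wavelength in (1.3, 1.6):
        energy = photon_energy_ev(wavelength)
        for material in BAND_GAP_EV:
            verdict = "absorbs" if can_absorb(material, wavelength) else "is transparent"
            print(f"{wavelength} um ({energy:.2f} eV): {material} {verdict}")
```

Under these assumptions, photons across the 1.3 to 1.6 micron range carry less energy than silicon's band gap but more than germanium's, which matches the roles the two materials play in the detector.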

Diving deeper into the science behind the materials used for this project, Intel revealed that the crystal lattice of germanium is four percent larger than that of silicon. This alone can introduce additional strain and a loss in performance when germanium is grown on silicon.
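The four percent figure can be checked with a quick calculation from the lattice constants of the two crystals. This is a minimal sketch that assumes commonly cited room-temperature lattice constants (about 5.431 Å for silicon and 5.658 Å for germanium); those values and the function name are not from Intel's talk.

```python
# Commonly cited room-temperature lattice constants, in angstroms.
# Assumption: reference values, not figures quoted by Intel.
SILICON_LATTICE_A = 5.431
GERMANIUM_LATTICE_A = 5.658


def lattice_mismatch(substrate_a: float, layer_a: float) -> float:
    """Fractional mismatch of a grown layer relative to its substrate."""
    return (layer_a - substrate_a) / substrate_a


if __name__ == "__main__":
    mismatch = lattice_mismatch(SILICON_LATTICE_A, GERMANIUM_LATTICE_A)
    print(f"Germanium on silicon mismatch: {mismatch:.1%}")
```

With these inputs the mismatch comes out at a little over four percent, in line with the figure Intel quoted.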

If the growth process is too relaxed, you end up with dislocations in the crystal structure. These defects increase the dark current (the current that flows through the detector even when no light is present), and that is what causes the loss in performance. Intel says that it has optimised the growth process to ensure that the impact of the defects is kept to a minimum.

When quizzed about when this technology will be commercially available, Paniccia said that he expects to see devices using it before the end of the decade. That said, he was pretty vague about exactly what type of device we can expect to see using it first; I think it’s fair to say we won’t be seeing CPUs using this technology for a while.


