In the hopes of turning more manufacturers on to its Tegra line of mobile-oriented chipsets, Nvidia has released a whitepaper that claims multi-core computing is the future for smartphones and tablets - and even argues that multiple cores can save, rather than cost, you power.

The paper, entitled 'Benefits of Multi-core CPUs in Mobile Devices,' predicts that dual-core processors will become the norm for mobile devices in 2011, and that quad-core chips will arrive shortly after.

It's not all about pushing more processing power into a device, however: the company claims that 'mobile devices like smartphones and tablets benefit even more from multi-core architectures because the battery life benefits are so substantial.'

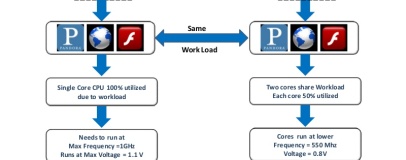

To demonstrate how such a seemingly contradictory stance - adding cores to lower power usage - is possible, Nvidia takes the example of a pair of otherwise identical mobile devices: one runs a single-core ARM Cortex-A9 chip, while the other features a dual-core ARM Cortex-A9 chip. In both cases, the processors are identical in specification aside from the number of processing cores.

In the paper, Nvidia argues that under a complex workload - running Internet radio, web browsing, and playing Flash content - the single-core chip will sit at 100 percent utilisation and have to run at its maximum frequency of 1GHz, which requires a voltage of 1.1V. Nvidia rates the power draw of the chip in this state as P.

For the same workload on the dual-core CPU, however, the tasks can be shared, leaving each core at 50 percent utilisation. Because the CPU isn't maxed out, it can be run at the slower speed of 550MHz and still get through the same workload - which in turn allows the voltage to drop to 0.8V.

Overall, Nvidia claims, the dual-core processor has a power draw under the same workload of 0.6P - or a 40 percent saving over a single-core chip.
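
The arithmetic behind that figure can be roughly reproduced. The sketch below is an illustration rather than Nvidia's own working: it assumes the standard approximation that a CMOS chip's dynamic power scales with capacitance times voltage squared times frequency, ignores leakage and the rest of the system, and treats the two cores as identical. The function name `dynamic_power` and the normalised capacitance of 1.0 are made up for the example.

```python
def dynamic_power(voltage_v, freq_ghz, cores=1, capacitance=1.0):
    """Relative dynamic power, assuming P is proportional to C * V^2 * f per core.

    capacitance is a normalised placeholder (an assumption); it cancels
    out when the two configurations are compared as a ratio.
    """
    return cores * capacitance * voltage_v ** 2 * freq_ghz


# Single core flat out: 1GHz at 1.1V (figures quoted in the article)
single = dynamic_power(1.1, 1.0)

# Two cores sharing the work: 550MHz at 0.8V each
dual = dynamic_power(0.8, 0.55, cores=2)

ratio = dual / single
print(f"Dual-core draw relative to single-core: {ratio:.2f}P")
```

Under those assumptions the dual-core chip comes out at roughly 0.58P, which lines up with the 0.6P Nvidia quotes. The saving comes almost entirely from the voltage term, since it is squared: halving the clock alone would be cancelled out by doubling the number of cores.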

While the rest of the whitepaper - which you can download as a PDF from Nvidia - concentrates on selling the benefits of Nvidia's Tegra 2 platform specifically, the power saving claims should be of interest to all.

Are you convinced by Nvidia's calculation, or do you doubt that a simple switch to a dual-core architecture can yield such impressive power savings? Share your thoughts over in the forums.
