RT Cores and the ray tracing workloads they enable are the most important change in Turing, and the final key part of the hardware puzzle. Inspiring a move from GTX to RTX nomenclature, RT Cores are a big deal. Other manufacturers have produced ray tracing GPU hardware before, but none with as big a say in the industry as Nvidia, and never at a time when ray tracing had been built into a major API, as it now has been in DirectX with DXR (it’s also coming to Vulkan).

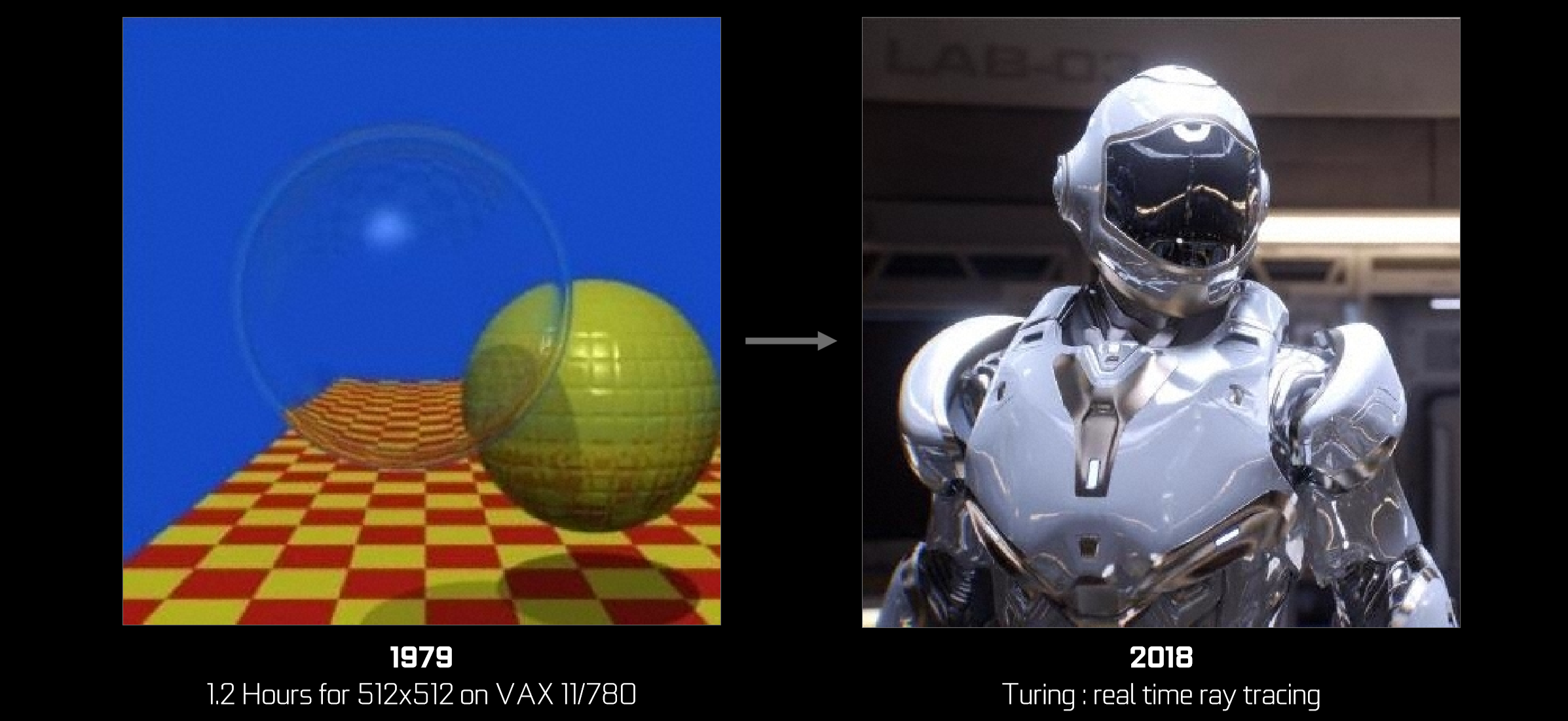

Ray tracing is often referred to as the holy grail of lighting in artificial environments, as it most accurately reflects what goes on in the world as we know it: rays of light hitting and interacting with objects. There’s a good reason why CGI in top-budget films uses ray tracing: When it comes to realism, it can’t be beat.

Games, however, have traditionally had to rely on rasterisation and “pre-baked” lighting, since the computational cost of constantly tracing thousands of rays or more through a scene in real time has always been far too high. Developers have gotten pretty good at simulating light, but every method still has its visual downsides, and doing it well is complicated and thus costly in time and resources. Another appeal of ray tracing, then, is its simplicity: If you can accurately model the light sources and objects in a scene, ray tracing simply works out how it all interacts in a responsive and dynamic way, producing accurate reflections, refractions, and shadows as it does so. As modelling and performance improve, things, as they say, can only get better. RT Cores are the first major step toward real-time ray tracing, but understanding what they’re doing involves a closer look at current ray tracing methods.

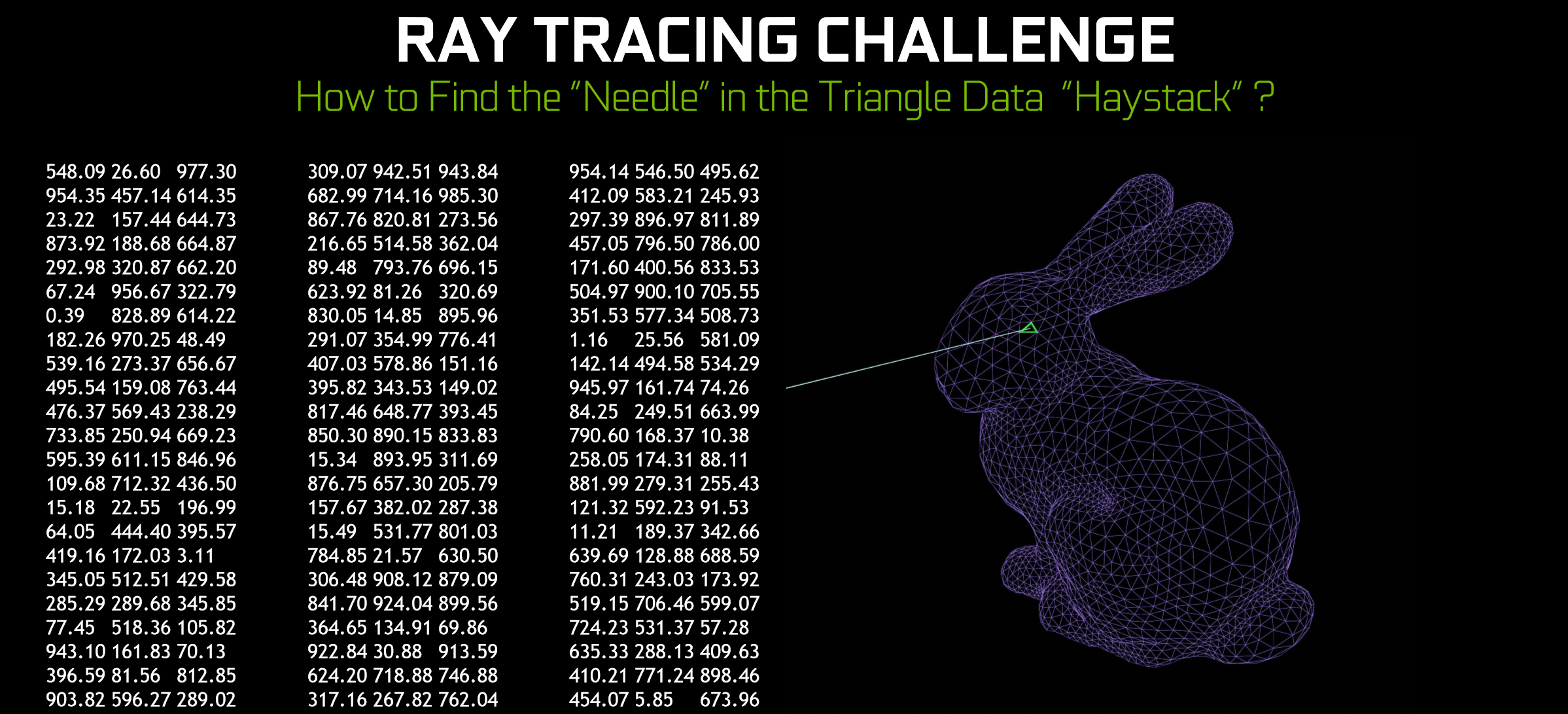

Even in CGI, you don’t want to waste resources (energy, time, money), and one easy way to cull computation when it comes to ray tracing is to focus only on what you see. As such, we have a process called ray casting, which is essentially ray tracing in reverse: rays are shot from a simulated viewpoint (your eye position) through the pixels and into the scene. As the rays hit objects, bounce off them, and find their way to a light source, variables such as the distance travelled and the colour/material properties of the objects hit are calculated and used to determine the final colour of each pixel. Objects in a 3D world are made up of primitives, so ray casting involves figuring out whether a ray will hit a primitive and, if so, which one(s). A given scene can contain an astonishingly high number of primitives, even when limited to the visible area, so additional trickery is needed.
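
As a rough illustration of the very first step, the sketch below generates the primary ray that passes through the centre of a given pixel. It assumes a simple pinhole camera sitting at the origin and looking down the negative z axis, with a 90-degree vertical field of view by default; `primary_ray` is a hypothetical helper, and these conventions are illustrative choices rather than anything taken from DXR or Nvidia’s drivers.

```python
import math


def primary_ray(px, py, width, height, fov_deg=90.0):
    """Return (origin, direction) for the ray through the centre of pixel (px, py).

    Hypothetical helper: assumes a pinhole camera at the origin looking down -z,
    with fov_deg as the vertical field of view (illustrative conventions only).
    """
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel centre into [-1, 1] screen space, then onto the image plane at z = -1.
    x = (2 * (px + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (py + 0.5) / height) * scale
    length = math.sqrt(x * x + y * y + 1.0)
    # Normalise so every direction has unit length.
    return (0.0, 0.0, 0.0), (x / length, y / length, -1.0 / length)


# One primary ray per pixel for a tiny 4x3 image, row by row.
rays = [primary_ray(x, y, 4, 3) for y in range(3) for x in range(4)]
```

A real renderer would also apply the camera’s position and orientation to each ray, which this sketch leaves out for brevity.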

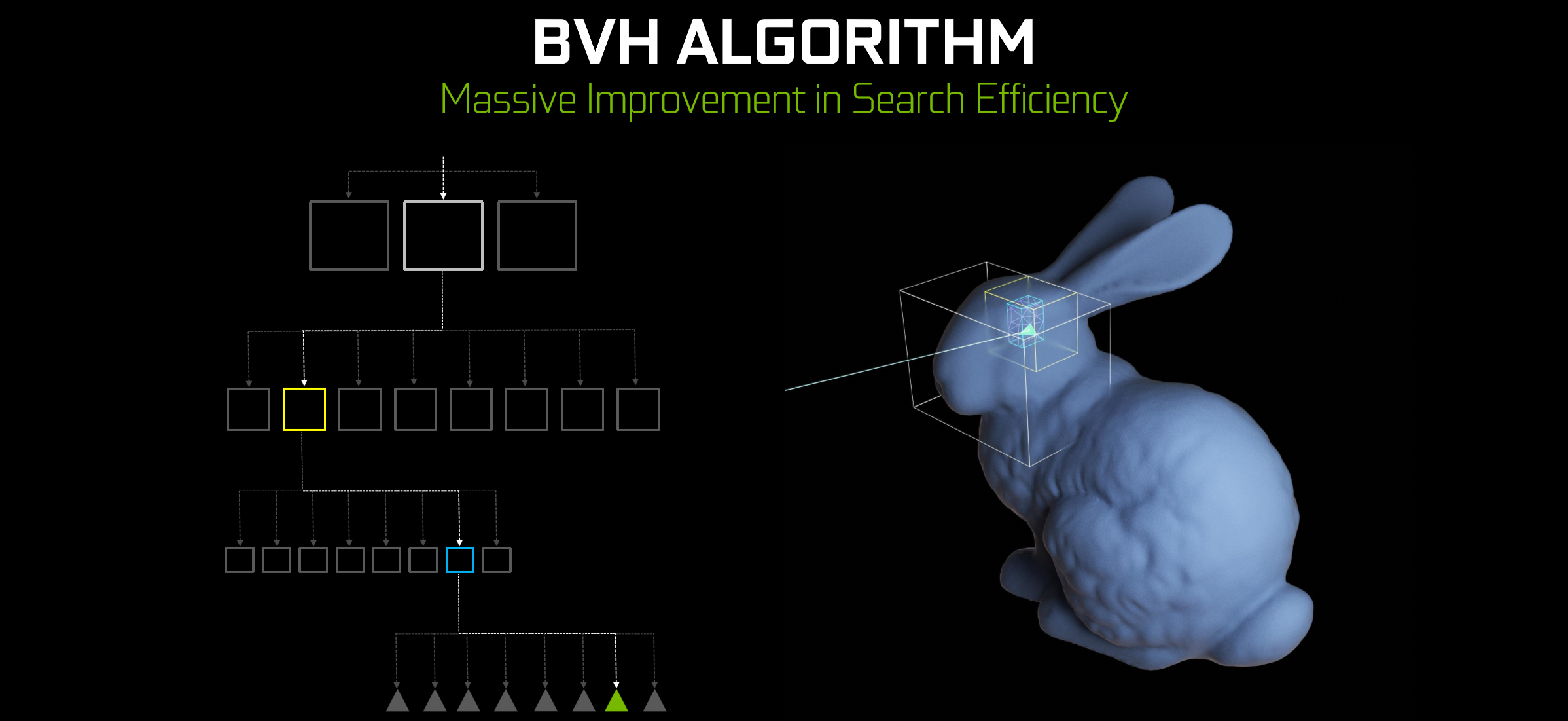

That trickery is known as a Bounding Volume Hierarchy (BVH), a means of grouping geometry into progressively larger groups. Primitives (triangles) are grouped and bound by a box, groups of those boxes are merged into a larger box, and so on. Now, instead of performing an intersection test on an impractically large number of individual triangles, only a small number of large boxes get tested at first. Once a ray hits a box, the intersection test repeats, but only for the boxes within that box, and so on until the final level is reached, at which point the remaining primitives are tested for intersection.
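
The grouping and box-first traversal described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the median split along the longest axis and the “slab” ray-box test are common textbook choices, and `build`, `hit_box` and `candidates` are hypothetical names. It is not how Nvidia’s driver actually builds or refits its BVHs. Traversal returns only the triangles whose enclosing boxes the ray passes through; those would then get exact intersection tests.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec  # minimum corner of the axis-aligned bounding box
    hi: Vec  # maximum corner
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    tris: List[int] = field(default_factory=list)  # triangle indices (leaves only)


def bounds(tris, ids):
    pts = [p for i in ids for p in tris[i]]
    lo = tuple(min(p[a] for p in pts) for a in range(3))
    hi = tuple(max(p[a] for p in pts) for a in range(3))
    return lo, hi


def build(tris, ids, leaf_size=2):
    """Hypothetical builder: median split on the longest axis (leaf_size must be >= 1)."""
    lo, hi = bounds(tris, ids)
    if len(ids) <= leaf_size:
        return Node(lo, hi, tris=list(ids))
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    # Sort by the sum of vertex coordinates on that axis (proportional to the centroid).
    ids = sorted(ids, key=lambda i: sum(p[axis] for p in tris[i]))
    mid = len(ids) // 2
    return Node(lo, hi, build(tris, ids[:mid], leaf_size), build(tris, ids[mid:], leaf_size))


def hit_box(orig, dirn, lo, hi):
    """Slab test: does the ray hit the box at some distance t >= 0?"""
    tmin, tmax = 0.0, float("inf")
    for a in range(3):
        if dirn[a] == 0.0:
            # Ray is parallel to this pair of planes, so it must already lie between them.
            if not lo[a] <= orig[a] <= hi[a]:
                return False
            continue
        t1 = (lo[a] - orig[a]) / dirn[a]
        t2 = (hi[a] - orig[a]) / dirn[a]
        tmin = max(tmin, min(t1, t2))
        tmax = min(tmax, max(t1, t2))
    return tmin <= tmax


def candidates(root, orig, dirn):
    """Triangles whose leaf boxes the ray enters; missed boxes cull whole subtrees."""
    out, stack = [], [root]
    while stack:
        node = stack.pop()
        if not hit_box(orig, dirn, node.lo, node.hi):
            continue
        if node.left is None:
            out.extend(node.tris)  # leaf: these still need exact triangle tests
        else:
            stack += [node.left, node.right]
    return out
```

With, say, four triangles spread along the x axis, a ray fired straight at the first one descends into only one branch at each level, and every other subtree is rejected by a single box test. That saving is the whole point of the hierarchy.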

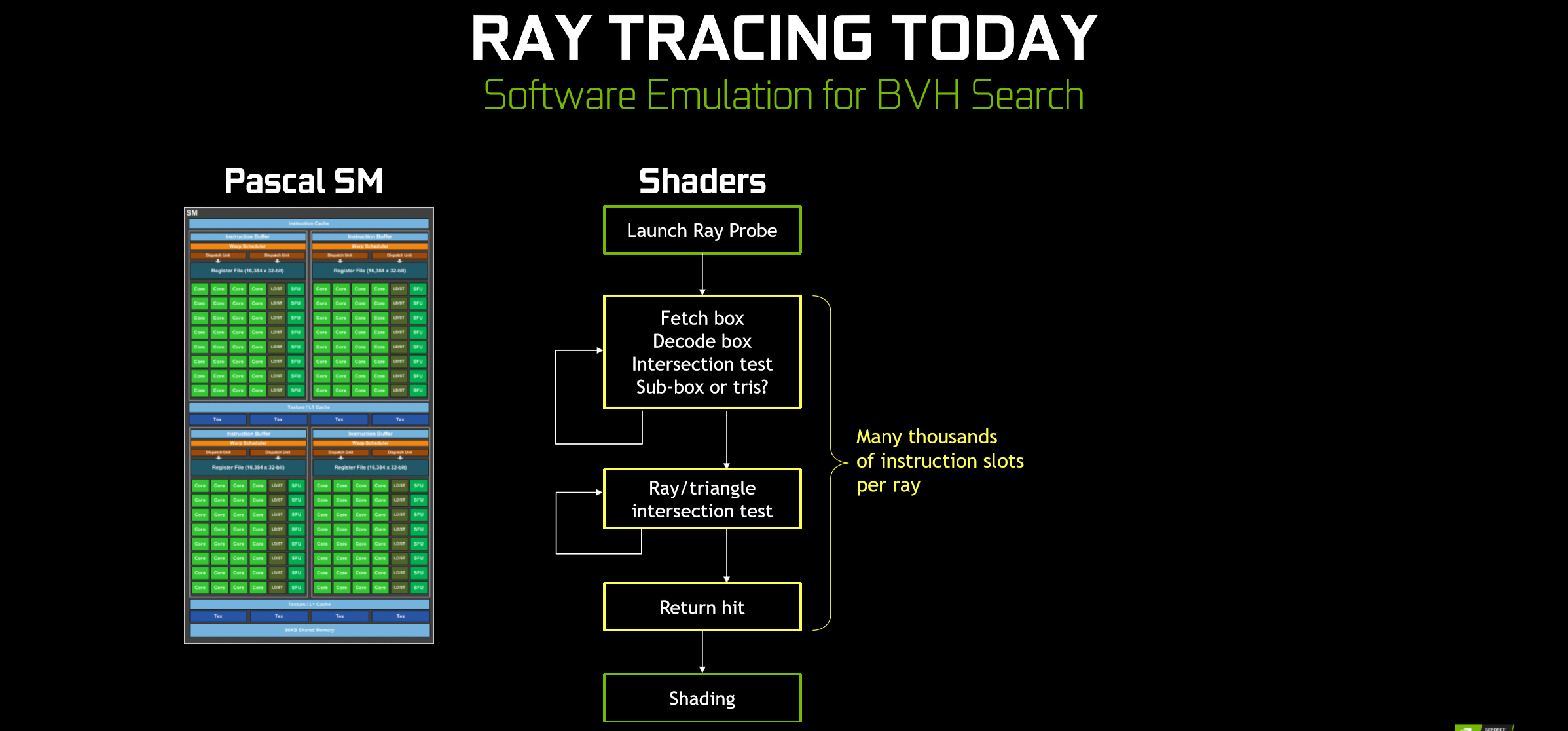

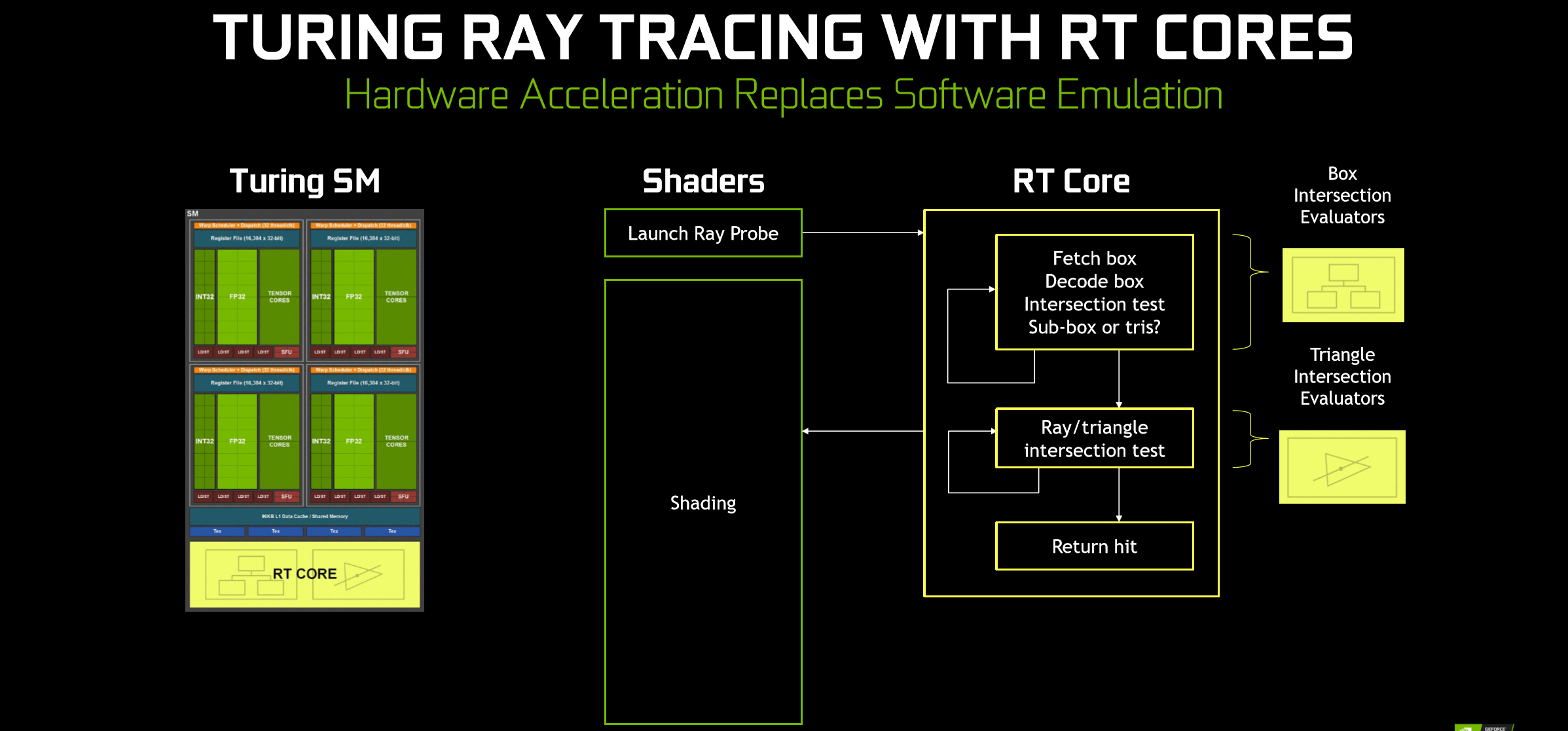

In games, creating and traversing BVH structures is certainly possible with today’s drivers and shader hardware, but even with a highly efficient BVH you’re looking at thousands of instructions per ray, executed one after the other until a hit is found, and thus a significant stall in the pipeline. RT Cores, though, vastly accelerate two processes: ray-box (bounding volume) intersection tests and ray-triangle intersection tests. In Turing, then, the regular shaders only need to launch a single ray probe call and then perform accurate shading on the returned primitive (or use the background colour if no primitive is returned), or potentially launch secondary rays further into the scene.
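
The triangle half of that work can be illustrated in plain software. The sketch below uses the well-known Möller–Trumbore ray-triangle test, a standard published algorithm (Nvidia has not said which method its hardware uses), then keeps the closest hit among the candidate triangles and falls back to a background colour on a miss, mirroring the “shade the returned primitive or use the background” logic above. The function names and the per-triangle `colours` list are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersect_triangle(orig, dirn, tri, eps=1e-9):
    """Moller-Trumbore test: distance t along the ray to the hit, or None on a miss."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(dirn, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(dirn, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


def shade(orig, dirn, tris, colours, candidate_ids, background=(0, 0, 0)):
    """Hypothetical shading step: closest hit's colour, or the background on a miss."""
    best_t, best_id = None, None
    for i in candidate_ids:
        t = intersect_triangle(orig, dirn, tris[i])
        if t is not None and (best_t is None or t < best_t):
            best_t, best_id = t, i
    return background if best_id is None else colours[best_id]
```

In practice, `candidate_ids` would come from BVH traversal rather than being every triangle in the scene.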

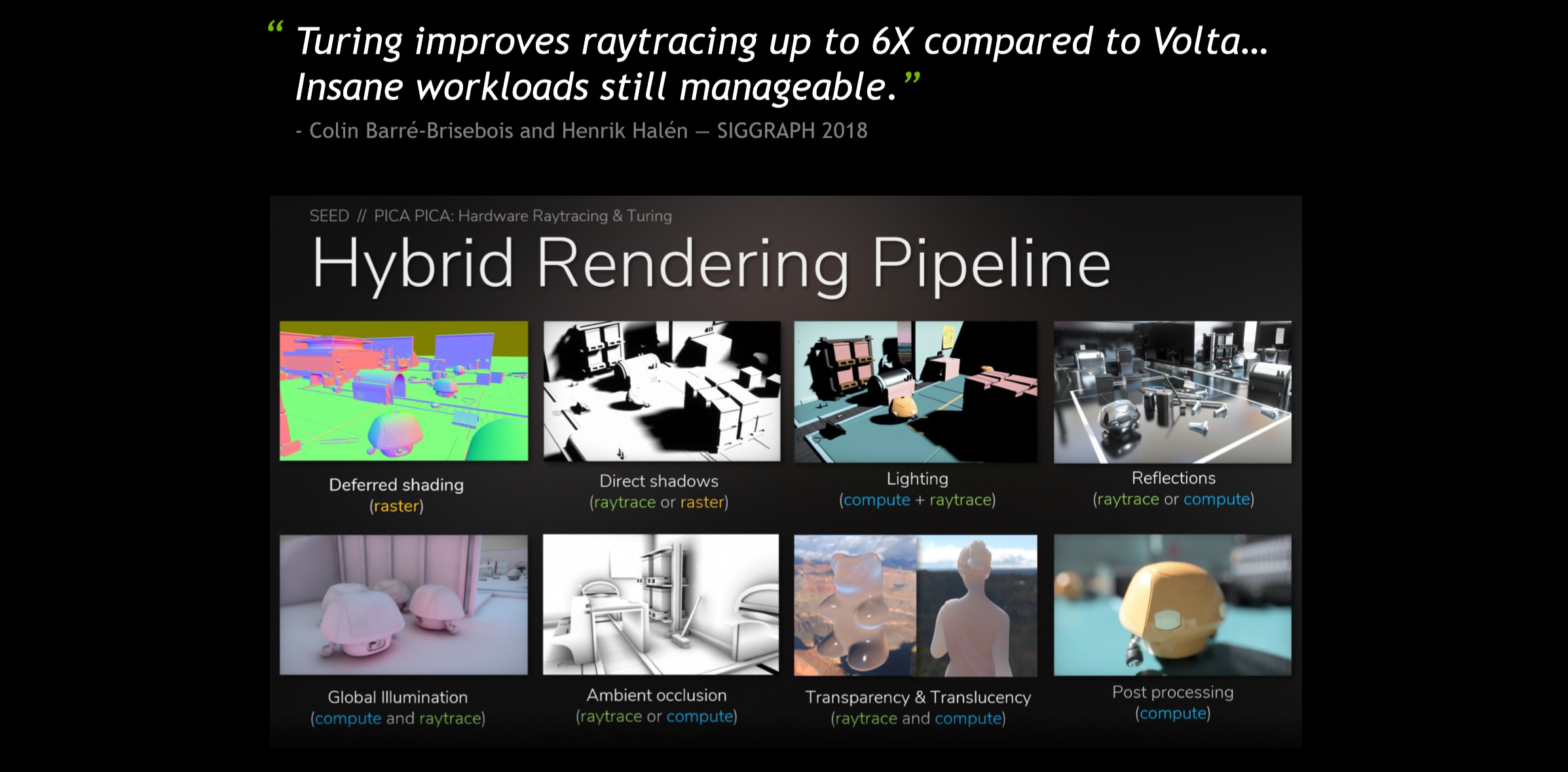

Another important point is that ray tracing isn’t supplanting all lighting and effects in a scene. It is simply another tool in the developer’s arsenal, and Nvidia intends for it to be used in conjunction with traditional rasterisation techniques. For instance, rays can be launched directly from object surfaces to produce more accurate reflections, refractions, and shadows: rasterisation already determines which objects are visible, so there’s no need to cast the first ray from the viewpoint. While RT Cores do accelerate ray tracing workloads to the point that they can now be performed in real time on a single GPU, this is still far from full global illumination. There is still a performance hit, and a limit on the total number of rays cast (based on a variety of factors), that devs will need to account for when deciding how best to use the hardware.
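
As a minimal sketch of that hybrid approach, assume rasterisation has already produced the visible surface position for a pixel (for example, read from a G-buffer). A single shadow ray from that point toward a point light then only has to answer “is anything in the way?”, and can stop at the first occluder it finds. To keep the example self-contained, the occluders are hypothetical spheres rather than triangles in a BVH, and `in_shadow` is an illustrative name; in DXR, a shader would issue the equivalent query with `TraceRay`.

```python
import math


def ray_hits_sphere(orig, dirn, centre, radius, t_max):
    """True if the ray (dirn must be unit length) hits the sphere with 0 < t < t_max."""
    oc = tuple(o - c for o, c in zip(orig, centre))
    b = sum(d * x for d, x in zip(dirn, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return False
    root = math.sqrt(disc)
    # Small epsilon avoids the surface shadowing itself due to rounding.
    return any(1e-4 < t < t_max for t in (-b - root, -b + root))


def in_shadow(surface_point, light_pos, occluders):
    """Hypothetical shadow query: any occluder between the surface point and the light?"""
    to_light = tuple(l - p for l, p in zip(light_pos, surface_point))
    dist = math.sqrt(sum(v * v for v in to_light))
    dirn = tuple(v / dist for v in to_light)
    # any() stops at the first hit: a shadow ray doesn't need the closest one.
    return any(ray_hits_sphere(surface_point, dirn, c, r, dist) for c, r in occluders)
```

Only hits closer than the light count, so an object behind the light casts no shadow on the point.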

As well as RT Cores, support for ray tracing in APIs, and driver-enabled BVH creation and refitting, one final piece of the ray tracing pie is a denoising filter that helps minimise the number of rays needed and fills in the gaps between them convincingly.
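
To show the basic idea of filling in gaps between sparse, noisy samples, here is a deliberately naive denoiser: a box filter that averages each pixel with its neighbours. It is an illustrative assumption only; production ray tracing denoisers are far more sophisticated (typically edge-aware, and often reusing data from previous frames), and `box_denoise` is a hypothetical name.

```python
def box_denoise(img, radius=1):
    """Naive box filter on a 2D list of intensities (hypothetical, for illustration).

    Each output pixel is the mean of the pixels within `radius` of it; the window
    is simply truncated at the image border.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


noisy = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
smoothed = box_denoise(noisy)
```

Here a single bright sample (say, the only ray in its neighbourhood that reached the light) is spread across the surrounding pixels. The price is blur, which is exactly what smarter denoisers work to avoid.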

To compare performance, Nvidia ran its GTX 1080 Ti and RTX 2080 Ti through ray tracing-intensive workloads and found that the former could cast on average 1.1 Giga Rays per second, compared to around 10 Giga Rays per second on the RTX 2080 Ti, hence the claim of ‘~10x faster than 1080 Ti’. Of course, ray tracing performance in games is yet another unknown at this stage, and again the data will be all but impossible to gather in time for launch, since very few games have confirmed RTX support and even fewer (zero) have it built in or enabled at the moment.
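
For a sense of scale, here is a back-of-the-envelope calculation using Nvidia’s quoted figures, under the simplifying assumption that the entire ray budget goes to a 4K (3840 × 2160) image at 60 frames per second with no other overheads:

$$
\frac{10\ \text{Giga Rays/s}}{1.1\ \text{Giga Rays/s}} \approx 9.1 \quad (\text{hence } {\sim}10\times)
$$

$$
\frac{10 \times 10^{9}\ \text{rays/s}}{3840 \times 2160 \times 60\ \text{pixels/s}} = \frac{10^{10}}{497{,}664{,}000} \approx 20\ \text{rays per pixel per frame}
$$

On the same assumptions, the GTX 1080 Ti’s 1.1 Giga Rays per second works out to roughly 2 rays per pixel per frame. Real-world budgets are likely to be lower, since shading, denoising, and the rest of the frame also take time.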
