Introduction

Throughout the history of technology, companies have made claims about products which have turned out, in retrospect, to be inaccurate. Some of them have been small quibbles - the performance of cache in a CPU, or a promised feature that didn't make it into a software package. Some, on the other hand, have been spectacular misses.

This is a celebration of those claims that have turned out to be so fantastically wrong. We hope you enjoy this trip down memory lane...

Intel - the Pentium 4 will hit 10GHz

The Pentium processor has been one of the biggest names of the last decade. It launched in 1993, and the name came from the fact that it was the fifth-generation x86 architecture chip from Intel. The chip had several successors, handily named the Pentium 2, Pentium 3 and Pentium 4. Each increased the number of transistors, the clock speed and the cache included on the chip, theoretically scaling the power and speed at the same time.

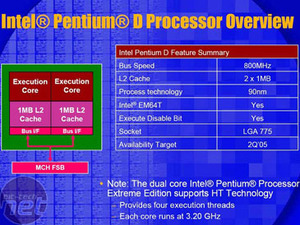

The Pentium 4 was released in November 2000, with the first revision codenamed 'Willamette'. It employed a microarchitecture called NetBurst, which was designed to provide the fastest, most efficient processing for the programmes of the time. Included in NetBurst was a rapid execution engine, where the ALUs in the CPU actually operated at double the frequency of the core clock, and Hyper Pipelined technology, Intel's name for the extra-long processing pipeline - Willamette had 20 stages whilst Prescott, the last revision of the Pentium 4, had an amazing 31.

The Pentium 4 was heralded as a chip which would be at the heart of computers for a decade, scaling up to 10GHz by following Moore's Law.

In an article for PC World, Intel's George Alfs was quoted as saying:

- "Intel's P4 at 2 GHz is the world's highest performance desktop processor... P4's NetBurst architecture has the ability to scale to 10 GHz in its lifetime."

Even better were the articles that attempted to compare the Pentium 4 to the Pentium 3. We now know, of course, that the P4's monstrously long pipeline was responsible for terrible heat problems and some dire performance. But back at the launch in 2000, Intel was playing down the drop in efficiency that the P4 represented - even though, as we know now, Intel has since reverted to a Pentium 3-like architecture with Core Duo. The quotes comparing the two are amusing:

- "A longer pipeline makes for higher clock speed. But does it sacrifice performance? The answer is yes and no.

Asked if a Pentium III would outperform a Pentium 4 at the same clock speed, Intel's Albert Yu said, "It's technically correct, but it's artificial."

Forthcoming Pentium III chips based on 0.13-micron process -- which are expected to reach speeds as high as 1.4GHz -- would outperform the Pentium 4 chips due out this fall on 0.18-micron technology. But Yu said that was an apples-to-oranges comparison."

So why did the Pentium 4 utterly fail to hit 10GHz? How did Intel pull the collective wool over the eyes of the technical press, unintentionally or otherwise? Why did Tejas, the successor to Pentium 4 that expanded on NetBurst, get so callously cancelled?

However, AMD did a very good job of debunking the 'megahertz myth'. It proved, at least to sophisticated users, that performance of a chip wasn't just about clock speed. It did a great job of branding itself as a superior technology with 64-bit, and it showed that efficiency could make for a processor that was easier to integrate. As Intel pushed BTX as a format that would solve heat problems on processors, AMD pointed out that none of its own processors had heat problems.
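The 'megahertz myth' can be put in rough numbers. As a minimal sketch, assume throughput is simply instructions per clock (IPC) multiplied by clock speed. The IPC and clock figures below are hypothetical values picked for illustration, not measurements of any real Intel or AMD chip, and `relative_throughput` is a name invented for this example.

```python
def relative_throughput(ipc: float, clock_ghz: float) -> float:
    """Billions of instructions per second under the simple model IPC x clock."""
    return ipc * clock_ghz


# Hypothetical figures, chosen only to illustrate the argument.
# A long-pipeline chip: high clock speed, less work done per clock.
long_pipeline_chip = relative_throughput(ipc=0.8, clock_ghz=3.0)

# A more efficient chip: lower clock speed, more work done per clock.
efficient_chip = relative_throughput(ipc=1.2, clock_ghz=2.2)

# Under these assumed numbers the lower-clocked chip comes out ahead.
print(efficient_chip > long_pipeline_chip)
```

Real performance depends on far more than these two numbers, but the sketch shows why a higher clock speed on its own guarantees nothing.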

And eventually, the lack of efficiency undid the Pentium 4. Reviewers went to town on Intel as the chip progressed, because the heat went past the reasonable stage, making it a difficult processor to work with and entailing loud and bulky machines.

The inefficiency of the NetBurst architecture was becoming ever more apparent, and AMD provided better competition. Frankly, NetBurst was wrong. Intel was betting that media and online programmes would make great use of the long pipeline in the architecture, but that turned out not to be the case, as performance suffered from repeated pipeline stalls. The one aspect of NetBurst that was beneficial - HyperThreading, which was the forerunner of dual-core - proved to be the stepping stone to the next-gen architecture, rather than the saviour of the P4.
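To see why a longer pipeline makes stalls more expensive, here is a back-of-the-envelope sketch. It uses a common textbook simplification, not Intel's own model: every mispredicted branch is assumed to cost roughly one full pipeline's worth of cycles. The 20 and 31 stage counts are the Willamette and Prescott figures quoted earlier; the base CPI, branch fraction and misprediction rate are assumptions chosen for illustration, and `effective_cpi` is a hypothetical helper.

```python
def effective_cpi(base_cpi: float, branch_fraction: float,
                  mispredict_rate: float, pipeline_stages: int) -> float:
    """Average cycles per instruction, assuming each mispredicted branch
    flushes the pipeline and wastes about `pipeline_stages` cycles."""
    stall_cycles = branch_fraction * mispredict_rate * pipeline_stages
    return base_cpi + stall_cycles


# Assumed workload: 1 in 5 instructions is a branch, 5% are mispredicted.
ASSUMED = dict(base_cpi=1.0, branch_fraction=0.2, mispredict_rate=0.05)

willamette_cpi = effective_cpi(pipeline_stages=20, **ASSUMED)
prescott_cpi = effective_cpi(pipeline_stages=31, **ASSUMED)

# Same workload, deeper pipeline: more cycles lost per instruction.
print(f"20 stages: {willamette_cpi:.2f} CPI, 31 stages: {prescott_cpi:.2f} CPI")
```

Under these assumptions the deeper pipeline has to claw back its higher cycle count with extra clock speed before it gains anything, which is the trade-off NetBurst was betting on.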

What also changed was usage. It was all very well having faster processors, but how fast did users really need to run Office? The bottleneck on net speed has never been the processor! At the same time, we wanted to do more things at once, which lent itself to more cores rather than more raw speed.

The final nail in the coffin was Pentium M. The spiritual successor to the Pentium 3, it proved that Intel could still make fast, efficient chips that were architecturally more elegant and got the job done. Once we saw that, why would we continue to put up with Pentium 4?

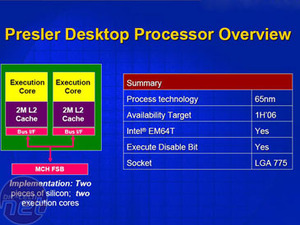

The successor to Pentium 4 inside Intel, Tejas, was scheduled to be released in mid-2005, but was cancelled as the rampant success of Pentium M became apparent. NetBurst development was ended, and Intel's internal focus shifted to creating a desktop chip out of the new mobile architecture.

Now we have Core 2 Duo, and Pentium 4 is consigned to history. Will Intel hit 10GHz in the next 5 years? Who knows. With the Core microarchitecture, it surely has a better chance.
