Amazon is rumoured to be looking towards ARM for the future growth of its cloud computing platform, hiring former Calxeda staff in what is claimed to be a plan to produce its own custom processors.

While best known as a bookseller turned almost-everything seller, Amazon also runs a large cloud computing business. Originally built to serve Amazon's own needs, the platform was opened to the public as a new venture for the company and offers everything from on-demand computation in the Elastic Compute Cloud (EC2) to low-cost data storage in the Simple Storage Service (S3). To do that, Amazon needs a lot of servers, and many of the workloads they run value concurrency (the ability to run many low-horsepower computing threads simultaneously) over raw performance.

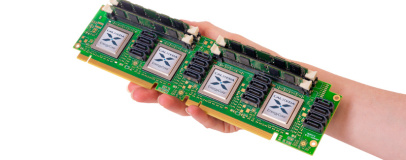

Accordingly, many see Cambridge-based ARM as a good fit: already making inroads into micro-server and cloud computing ventures, ARM's chip designs can't rival data centre giant Intel for performance but can run at considerably lower power. Lower power means lower operating costs, and the ability to cram more physical processors - and thus run more concurrent threads - into the same space, power and thermal envelopes.

It should perhaps be little surprise, then, to learn that Amazon is one of the companies claimed to be investigating ARM in the data centre. Gigaom has gathered evidence that the company has been hiring ARM processor experts, many of whom come from failed ARM server start-up Calxeda, with a view to building its own custom chips based on the ARM architecture.

Amazon, as might be expected, has declined to comment on the site's claims, but the news comes as Google shows off a custom server motherboard based on IBM's Power8 architecture. With Google and Amazon representing two of the biggest single buyers of server components in the world, it wouldn't be surprising to see Intel make some dramatic changes to its Xeon family in the near future to better fight off the continued threat of non-x86 chips in the data centre.

