Advertising giant Google has announced that it is going all-in on artificial intelligence, with moves ranging from a rebrand of its research department to next-generation Tensor Processing Unit (TPU) hardware that could see it take the top spot in the world supercomputer rankings.

During Google's I/O conference late last night, the company made a range of announcements relating to artificial intelligence. These included an impressive demonstration of the Google Assistant, its voice-activated, machine-learning-backed assistant, making a live, interactive telephone call on its user's behalf to book a table at a restaurant. While the company's advances in AI are already being felt by its users, Google is looking to go still further, starting with a new name for its research and development division.

'Hmm, have I made a wrong turn? I was looking for Google Research,' jokes Google's Christian Howard on the company's official blog. 'For the past several years, we’ve pursued research that reflects our commitment to make AI available for everyone. To better reflect this commitment, we’re unifying our efforts under “Google AI”, which encompasses all the state-of-the-art research happening across Google. As part of this, we have expanded the Google AI website, and are renaming our existing Google Research channels, including this blog and the affiliated Twitter and Google+ channels, to Google AI.'

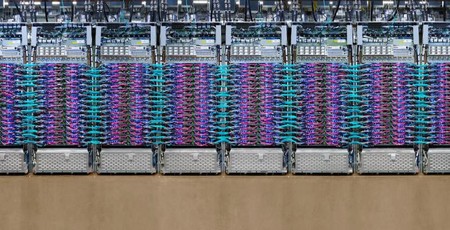

That focus on AI is nowhere more evident than in the company's hardware arm, which showed off its third-generation TPU during the event. First unveiled in 2016, the TPU is a custom processor designed for maximum performance in deep learning and other machine intelligence tasks. The second-generation implementation offered a considerable boost in performance, but the third generation really takes the cake: according to Google's internal testing, an accelerator pod of TPU 3.0 modules, which now boasts an integrated liquid cooling system, offers eight times the performance of last year's equivalent at a claimed 100 petaflops.

If accurate, a 100 PFLOP/S result would place the TPU 3.0 pod above the current world's fastest supercomputer, the Sunway TaihuLight, which has a recorded maximum sustained performance of 93 PFLOP/S and a peak of 125.4 PFLOP/S. Google's system, however, can't be directly compared to its supercomputer rivals. The supercomputer rankings measure double-precision floating-point performance, whereas the TPU is built for machine learning workloads which are, to quote Google's Norm Jouppi, 'more tolerant of reduced computational precision'.

As with previous TPU releases, Google has given no indication that it plans to release the hardware to the public.
