Microsoft unveils Project Brainwave neural network platform

August 23, 2017 | 11:35

Companies: #arm #intel #microsoft #microsoft-research

Microsoft has officially unveiled a new deep-learning acceleration programme, Project Brainwave, which it claims allows for real-time artificial intelligence with ultra-low latency.

The latest in Microsoft's growing efforts in deep learning and machine intelligence, Project Brainwave was formally unveiled at the Hot Chips conference late last night. Described by engineer Doug Burger in his announcement post as the result of work by a 'cross-Microsoft team,' Project Brainwave is effectively a three-layer platform: a compiler and runtime engine allowing for rapid deployment of trained models, a hardware platform based around field-programmable gate arrays (FPGAs) which acts as a deep neural network (DNN) engine, and a fully distributed system architecture.

The result, Burger claims, is a major increase in performance and the ability to treat neural networks as hardware microservices: services called directly by a server with no intervening software layer. 'This system architecture both reduces latency,' Burger explains, 'since the CPU does not need to process incoming requests, and allows very high throughput, with the FPGA processing requests as fast as the network can stream them.'
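The latency and throughput argument can be illustrated with a toy pipeline model. The sketch below is our own simplification, not Microsoft's design. It assumes per-request latency is the sum of each stage's latency and that sustained throughput is capped by the slowest stage. All stage names and numbers are hypothetical, chosen only to show why removing a slower CPU software stage from the request path helps on both counts.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    latency_us: float  # time one request spends in this stage (hypothetical figure)
    rate_per_s: int    # requests per second this stage can sustain (hypothetical figure)


def pipeline_stats(stages):
    """Toy model: latencies add up; sustained throughput is capped by the slowest stage."""
    latency = sum(s.latency_us for s in stages)
    throughput = min(s.rate_per_s for s in stages)
    return latency, throughput


# Hypothetical stages, not measured Brainwave figures.
network = Stage("network", 10.0, 1_000_000)
cpu = Stage("cpu software stack", 50.0, 100_000)
fpga = Stage("fpga dnn engine", 20.0, 1_000_000)

with_cpu = pipeline_stats([network, cpu, fpga])  # CPU handles every request
direct = pipeline_stats([network, fpga])         # "hardware microservice": FPGA fed directly

print(with_cpu)  # (80.0, 100000)
print(direct)    # (30.0, 1000000)
```

In this toy model, the direct path is limited only by how fast the network delivers requests, which is the situation Burger describes.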

'We designed the system for real-time AI, which means the system processes requests as fast as it receives them, with ultra-low latency,' claims Burger. 'Real-time AI is becoming increasingly important as cloud infrastructures process live data streams, whether they be search queries, videos, sensor streams, or interactions with users.'

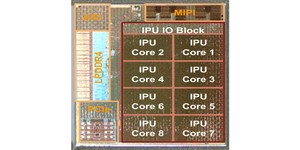

The platform is built on the Stratix 10, launched by Intel late last year, which bundles an FPGA with four ARM Cortex-A53 general-purpose CPU cores as a single system-on-chip. According to Burger, the FPGA approach brings notable advantages over other hardware DNN engines implemented as fixed application-specific integrated circuits (ASICs), such as Google's Tensor Processing Units (TPUs): 'A number of companies, both large companies and a slew of startups, are building hardened DPUs [DNN processing units]. Although some of these chips have high peak performance, they must choose their operators and data types at design time, which limits their flexibility,' Burger explains. 'Project Brainwave takes a different approach, providing a design that scales across a range of data types, with the desired data type being a synthesis-time decision.

'The design combines both the ASIC digital signal processing blocks on the FPGAs and the synthesisable logic to provide a greater and more optimised number of functional units. This approach exploits the FPGA’s flexibility in two ways. First, we have defined highly customised, narrow-precision data types that increase performance without real losses in model accuracy. Second, we can incorporate research innovations into the hardware platform quickly (typically a few weeks), which is essential in this fast-moving space. As a result, we achieve performance comparable to – or greater than – many of these hard-coded DPU chips but are delivering the promised performance today.'
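To see what 'narrow-precision data types' means in practice, the sketch below rounds values to a floating-point format with only a few mantissa bits and compares a quantised dot product with the full-precision result. It illustrates the general idea only; it is not Microsoft's actual data format. The function `quantize_mantissa`, the choice of bit widths, the unbounded exponent range and the full-precision accumulation are all assumptions made for this example. The `mantissa_bits` parameter loosely mirrors the idea of choosing a data type at synthesis time.

```python
import math
import random


def quantize_mantissa(x: float, mantissa_bits: int) -> float:
    """Round x to the nearest float with `mantissa_bits` explicit fraction bits.

    Illustrative only: the exponent range is left unbounded and ties round to even.
    """
    if x == 0.0 or not math.isfinite(x):
        return x
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)  # +1 accounts for the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)


def dot(a, b):
    # Products are accumulated in full double precision (an assumption of this sketch).
    return sum(x * y for x, y in zip(a, b))


random.seed(0)
weights = [random.uniform(-1.0, 1.0) for _ in range(256)]     # hypothetical model weights
activations = [random.uniform(0.0, 1.0) for _ in range(256)]  # hypothetical inputs

exact = dot(weights, activations)
for bits in (2, 5, 10):
    qw = [quantize_mantissa(w, bits) for w in weights]
    qa = [quantize_mantissa(a, bits) for a in activations]
    print(f"{bits:2d} mantissa bits: absolute error {abs(dot(qw, qa) - exact):.2e}")
```

Each quantised value lies within a relative error of 2^-(mantissa_bits + 1) of the original. Narrower types trade that small, bounded error for cheaper arithmetic units, which is the trade-off Burger describes.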

Burger says Microsoft plans to make the Project Brainwave platform available to customers of its Azure cloud computing platform 'in the near future.'
