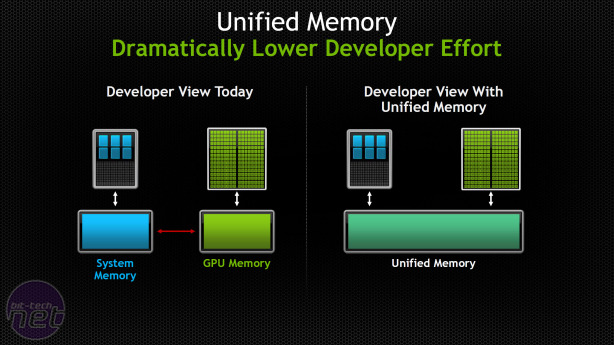

Nvidia has made a couple of announcements regarding its high-performance computing efforts ahead of the International Conference for High-Performance Computing that kicks off next week, the first of which is unified memory support in CUDA 6.

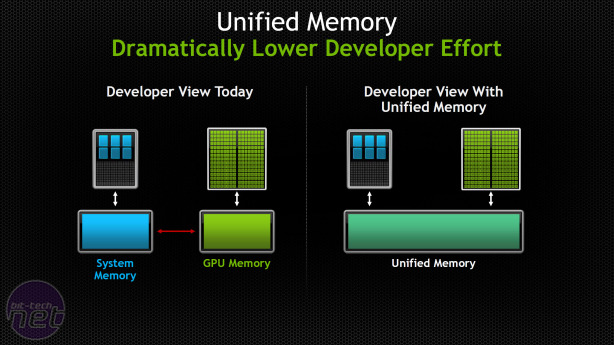

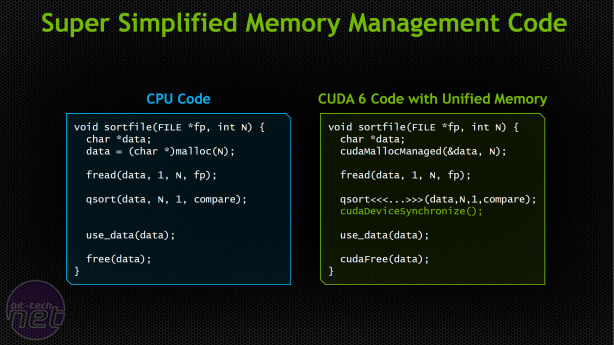

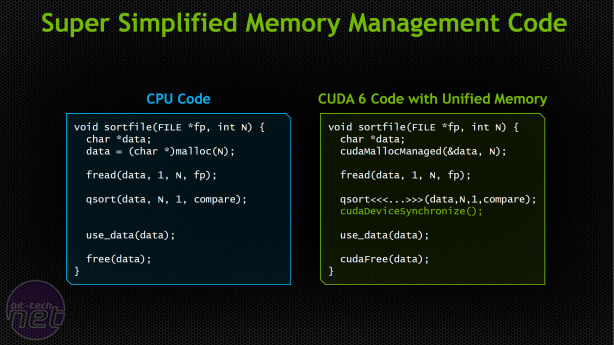

The hope is that this will greatly ease the process of writing programs that use CUDA, by simplifying the management of memory access.

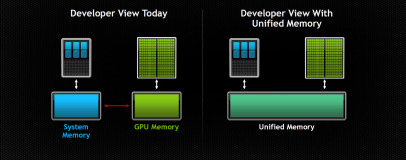

Previous implementations of CUDA have relied on the programmer to manage the exchange of information from CPU/system memory to GPU memory. This creates a sizeable and somewhat unnecessary overhead for coders. With the new unified memory system, though, programmers can access and operate on any memory resource, regardless of which pool of memory the address actually resides in.
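To make the manual approach concrete, here is a minimal sketch of the explicit-copy pattern described above. The kernel `add_one` and the helper `run_explicit_copy` are hypothetical names invented for illustration; `cudaMalloc`, `cudaMemcpy` and `cudaFree` are the standard CUDA runtime calls.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: adds 1.0f to every element.
__global__ void add_one(float *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1.0f;
}

// Hypothetical helper: returns true if every element ends up equal to 1.0f.
bool run_explicit_copy(int n) {
    size_t bytes = (size_t)n * sizeof(float);
    float *host = (float *)calloc(n, sizeof(float));  // system memory, zeroed
    float *dev = nullptr;                             // GPU memory
    if (!host) return false;
    if (cudaMalloc(&dev, bytes) != cudaSuccess) { free(host); return false; }

    // The programmer moves the data to the GPU by hand...
    cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice);

    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    add_one<<<blocks, threads>>>(dev, n);

    // ...and copies the result back by hand (this call also waits for the kernel).
    cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);

    bool ok = true;
    for (int i = 0; i < n; ++i) {
        if (host[i] != 1.0f) { ok = false; break; }
    }
    cudaFree(dev);
    free(host);
    return ok;
}

int main() {
    printf("%s\n", run_explicit_copy(1 << 20) ? "ok" : "mismatch");
    return 0;
}
```

Note that the code has to track two pointers for the same data, one per memory pool, and keep them in sync itself.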

The system doesn't actually eliminate the physical requirement to copy the memory contents from one pool to another but does remove the need for programmers to manage that part of the process - CUDA 6 does it automatically.
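As a rough sketch of what this looks like in practice, the example below uses `cudaMallocManaged`, the runtime call CUDA 6 provides for managed allocations. The article itself does not name the call; the kernel `add_one` and the helper `run_managed` are hypothetical names used only for illustration.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: adds 1.0f to every element.
__global__ void add_one(float *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1.0f;
}

// Hypothetical helper: returns true if every element ends up equal to 1.0f.
bool run_managed(int n) {
    size_t bytes = (size_t)n * sizeof(float);
    float *data = nullptr;

    // One allocation, one pointer, usable from both CPU and GPU code.
    if (cudaMallocManaged(&data, bytes) != cudaSuccess) return false;

    for (int i = 0; i < n; ++i) data[i] = 0.0f;  // written by the CPU

    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    add_one<<<blocks, threads>>>(data, n);       // read and written by the GPU

    // The CPU must wait for the kernel before touching the data again.
    cudaDeviceSynchronize();

    bool ok = true;
    for (int i = 0; i < n; ++i) {
        if (data[i] != 1.0f) { ok = false; break; }
    }
    cudaFree(data);
    return ok;
}

int main() {
    printf("%s\n", run_managed(1 << 20) ? "ok" : "mismatch");
    return 0;
}
```

There are no explicit copy calls here, but on a discrete GPU the data still travels between system memory and GPU memory behind the scenes.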

This is in contrast to the unified memory implementation that AMD has been lauding on its upcoming Kaveri APUs. Those chips actually use the same block of memory, eliminating entirely the need to copy the data from one block to another.

Indeed, it is quite possible that the new CUDA 6 solution will have a negative impact on performance, as finer control of memory management is taken away from the program. However, coders will still have the option to control memory manually if needed, while those that don't require more granular control get a simpler solution.

Also announced today is that CUDA 6 will include new BLAS and FFT libraries that are tuned for multi-GPU scaling and optimised to support up to 8 GPUs in a node.

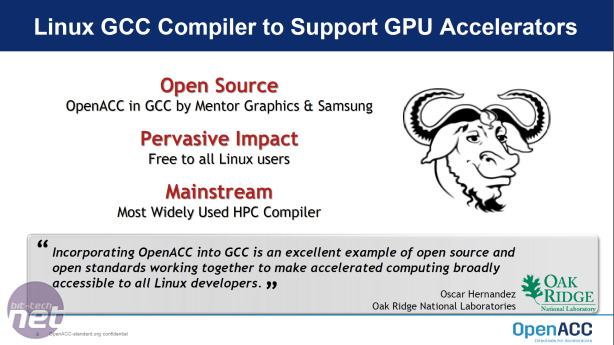

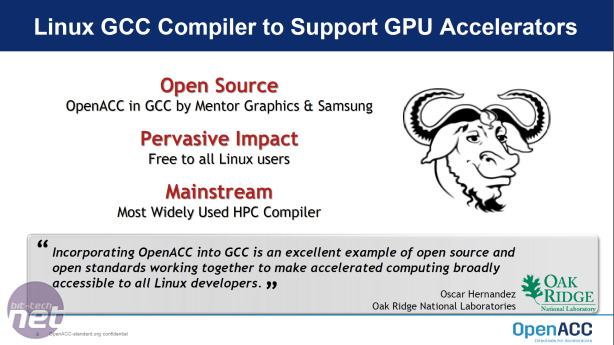

Today's final announcement regarding Nvidia and HPC is that the open-source parallel programming standard OpenACC, which supports Nvidia GPUs, will be incorporated into the GNU Compiler Collection (GCC), bringing GPU acceleration support to the popular compiler.

