Nvidia has declared itself all-in on powering the future of deep neural networks (DNNs) and artificial intelligence (AI), unveiling new tools and major partnerships with Chinese technology giants.

As major technology companies look for new areas of growth to satiate their investors, artificial intelligence is emerging as a strong contender. Google, Microsoft, and Intel have all been pushing their own software and hardware as the future of AI, and even mobile giant Huawei is predicting an explosion of interest in the field. Nvidia is naturally among them: its high-performance, massively parallel processors were originally developed for 3D acceleration but now also accelerate general-purpose compute. During a two-hour keynote at the GPU Technology Conference (GTC) in Beijing, company founder and chief executive Jensen Huang highlighted some of its most recent advances.

'At no time in the history of computing have such exciting developments been happening, and such incredible forces in computing been affecting our future,' Huang told attendees at the event. 'What technology increases in complexity by a factor of 350 in five years? We don’t know any. What algorithm increases in complexity by a factor of 10? We don’t know any. We are moving faster than Moore’s Law.'

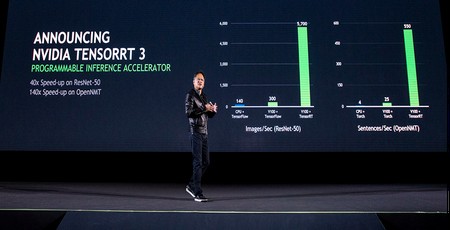

A key feature of Huang's speech was the announcement of TensorRT 3, an inferencing platform that, Nvidia claims, allows a previously trained DNN to run in a production environment at 45,000 images per second on the company's previously announced HGX server, which packs eight Tesla V100 GPGPU accelerators and draws 3kW of power. A traditional CPU-based platform, by contrast, would require 160 dual-CPU servers drawing 65kW to achieve the same performance. Huang explained that this inferencing tool is joined by the DeepStream software development kit (SDK) for GPU-accelerated video analytics and a new release of the company's proprietary Compute Unified Device Architecture (CUDA), its platform for offloading general-purpose code to the GPU.
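Taking Nvidia's figures at face value, and assuming both setups sustain exactly the quoted 45,000 images per second, a quick back-of-the-envelope calculation shows what the claim implies for throughput per watt. The numbers are Nvidia's own, not independent measurements, and the helper name `images_per_watt` is purely illustrative.

```python
# Figures as quoted in Nvidia's keynote claims (not independently measured).
THROUGHPUT_IMAGES_PER_S = 45_000  # same target throughput for both platforms
HGX_POWER_W = 3_000               # one HGX server with eight Tesla V100s
CPU_CLUSTER_POWER_W = 65_000      # 160 dual-CPU servers in total
CPU_SERVER_COUNT = 160


def images_per_watt(throughput: float, power_watts: float) -> float:
    """Inference throughput delivered per watt of power drawn (hypothetical helper)."""
    return throughput / power_watts


hgx = images_per_watt(THROUGHPUT_IMAGES_PER_S, HGX_POWER_W)
cpu = images_per_watt(THROUGHPUT_IMAGES_PER_S, CPU_CLUSTER_POWER_W)
per_cpu_server = THROUGHPUT_IMAGES_PER_S / CPU_SERVER_COUNT

print(f"HGX: {hgx:.2f} images/s per watt")
print(f"CPU cluster: {cpu:.3f} images/s per watt")
print(f"Efficiency advantage: {hgx / cpu:.1f}x")
print(f"Each CPU server handles about {per_cpu_server:.0f} images/s")
```

Because the throughput is identical on both sides, the efficiency ratio reduces to the power ratio, 65kW divided by 3kW, or roughly 21.7 times in the HGX server's favour; each of the 160 CPU servers would be handling about 281 images per second.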

Bigger news than the technology launches, however, was the revelation of deals with major Chinese technology companies. According to Huang, Nvidia's Tesla V100 cards have been deployed in the cloud platforms of Alibaba, Baidu, and Tencent, while Huawei, Inspur, and Lenovo have all adopted the HGX server architecture. In addition, Huang said, China's Big Five internet and AI companies (Alibaba, Tencent, Baidu, JD.com, and iFlytek) have already adopted the TensorRT 3 platform.

'Our vision is to enable every researcher everywhere to enable AI for the goodness of mankind,' claimed Huang in conclusion. 'We believe we now have the fundamental pillars in place to invent the next era of artificial intelligence, the era of autonomous machines.'
