HyperTransport 3.0

AMD's use of the open architecture, HyperTransport, has been updated from a 1GHz or 2GT/sec (Gigatransfers) to 2.0GHz or 4GT/sec on the AM2+ platform. Some CPUs have been tipped to run as high as 3.5GHz or 7GT/s, but that's not officially confirmed at the moment.AM2+ CPUs require an AM2+ motherboard to achieve these speeds and any combination of old AM2 technology with new will still be backward compatible, but will be clocked down to the slower speeds.

By increasing the HyperTransport speed, this allows for far more bandwidth between the cores to the peripheral devices (most notably graphics), helping to alleviate bottlenecks.

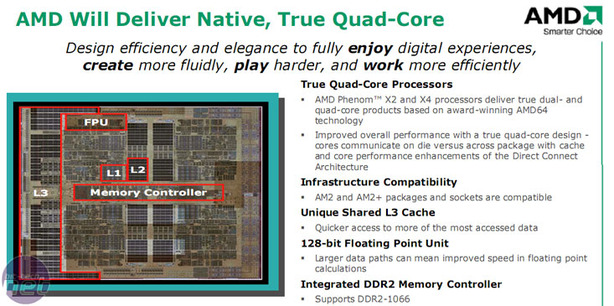

Shared L3 Cache

AMD is not the first to employ L3 cache on a consumer CPU. A few Intel Extreme Editions have previously been Xeon (Galatin) cores with 2MB L3 cache on to improve performance slightly over the standard Pentium 4s.Unfortunately in a single CPU, single socket environment the performance gain is extremely negligible because with each additional level of cache requires an increase in latency to access it. It’s still faster than a memory access, but performance gain was only in the region of five percent. This is a massive price to pay in millions of transistors for such a limited performance difference.

In comparison, in a multi-core environment when many CPUs are trying to fight to access memory and cache to keep them fed with information so they perform to their maximum potential, it’s advantageous but expensive to include another level of cache as a common information storage. Not only that but it’s also a place to throw information other cores may need or have requested.

By having a non shared and exclusive L1 and L2 cache the CPUs appear like independent units, and there’s no fighting for cache access or overwriting cache data from another core before it's had a chance to use it. AMD argues that programs have, for many years, been designed with a typical 512K of L2 cache in mind, and although more cache is better, it does provide diminishing returns the larger you go.

A shared large L3 cache takes some of the heat off the memory controller, acting like internal, communal splash memory for programs being run right at that moment, rather than global storage. In some cases it does increase latency because it’s yet another place to look, but it does also mean the many cores have the opportunity of looking there first instead of diving straight to memory, filling up the tubes and getting in each other’s way.

Fiscally speaking, less cache saves AMD and subsequently its consumers’ money, because the CPUs include fewer transistors which makes the die smaller.

MSI MPG Velox 100R Chassis Review

October 14 2021 | 15:04

Want to comment? Please log in.