Changing Perspective: SMP (Simultaneous Multi-Projection)

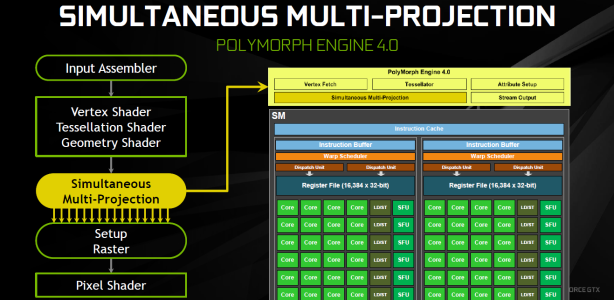

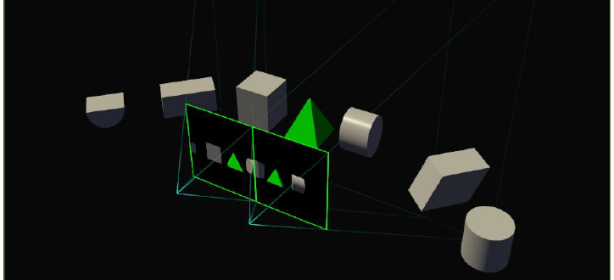

We said earlier that Pascal was effectively the same at a high level as Maxwell, but there is one small change worth going into. Nvidia has added a new Simultaneous Multi-Projection (SMP) block to the PolyMorph Engine 4.0. It sits in the pipeline after vertex, tessellation and geometry operations have been performed but before rasterisation and pixel shading. The SMP block takes the processed geometry data of a scene and can generate up to 16 different projections of it, all sharing the same centre (or viewpoint: effectively wherever the player is positioned in the scene). Furthermore, it can do this for up to two different projection centres offset along the x-axis, giving up to 32 projections in total, all without having to re-run the initial geometry processing. In theory, that means up to a 32x reduction in geometry work compared with processing the scene once per projection, although it won't be implemented to this degree initially.
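To make the "process once, project many times" idea concrete, here is a minimal Python sketch under simplifying assumptions of our own. `process_geometry` is a hypothetical stand-in for the vertex, tessellation and geometry stages, each projection is simply a view rotated about the vertical axis, and the ±0.032 centre offsets are made-up values (roughly half a typical interpupillary distance in metres). It is not how the fixed-function SMP hardware is actually programmed; it only shows that the expensive stage runs once however many projections are produced.

```python
import math

GEOMETRY_PASSES = 0  # how many times the expensive geometry stage has run

def process_geometry(vertices):
    """Hypothetical stand-in for vertex, tessellation and geometry work.
    Here it only moves the model 5 units in front of the viewer (along -z)."""
    global GEOMETRY_PASSES
    GEOMETRY_PASSES += 1
    return [(x, y, z - 5.0) for (x, y, z) in vertices]

def project(point, yaw_deg, centre_x):
    """Perspective-project a view-space point for a view rotated by yaw_deg about
    the vertical (y) axis, seen from a centre shifted by centre_x along x."""
    x, y, z = point
    x -= centre_x
    t = math.radians(yaw_deg)
    xr = math.cos(t) * x - math.sin(t) * z
    zr = math.sin(t) * x + math.cos(t) * z
    return (xr / -zr, y / -zr)  # the camera looks down -z

def multi_project(vertices, yaws, centres=(0.0,)):
    """Run geometry once, then reuse the result for every (centre, yaw) pair."""
    if len(yaws) > 16 or len(centres) > 2:
        raise ValueError("SMP as described: up to 16 projections per centre, 2 centres")
    processed = process_geometry(vertices)  # the only geometry pass
    return {(c, yaw): [project(p, yaw, c) for p in processed]
            for c in centres for yaw in yaws}

triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
views = multi_project(triangle, yaws=range(-60, 61, 8), centres=(-0.032, 0.032))
print(len(views), GEOMETRY_PASSES)
```

Running it reports 32 views from a single geometry pass, matching the ceiling of 16 projections for each of two centres described above.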

If you're gaming on a single, flat screen, then SMP offers no advantage. However, Nvidia Surround, curved panels, VR and even future applications like augmented reality and hologram projections all stand to benefit from it.

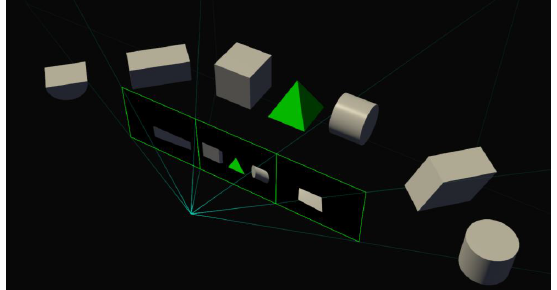

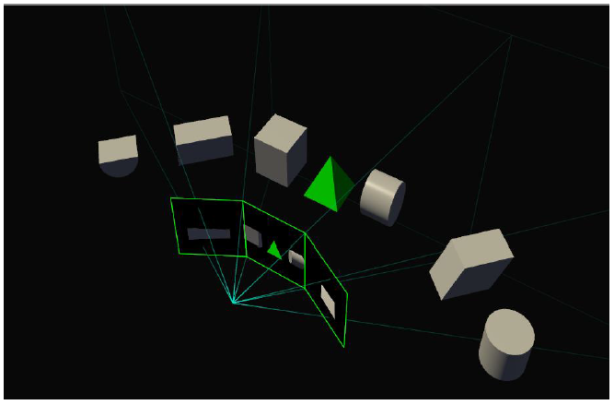

Take a triple 1080p screen setup, for example. Currently, this is rendered as if it were a single 5,760 x 1,080 flat surface, so if a user arranges the monitors side by side in a flat plane, the scene will be geometrically accurate (ignoring any bezel-related issues, at least). However, this takes up a lot of desk space and limits your field of view. As such, most users angle the side screens towards them, but this results in a distorted, stretched image, because the game is still rendering as if you're looking at a flat plane. Until SMP, the only way to fix this was to reprocess all the geometry data once for each screen, which is far too expensive. With SMP, however, the GPU can generate the three correct perspectives in a single pass for a much more accurate depiction of the scene from the player's viewpoint, whichever screen they're looking at. Nvidia calls this new feature Perspective Surround, and users will be able to tell the driver the tilt angle that best matches their physical setup.
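The stretching on angled side screens can be quantified with a little top-down trigonometry. The sketch below is our own simplified model, not Nvidia's driver maths: the viewer sits at the origin, the centre panel lies on a line `dist` metres ahead, and the right-hand panel is hinged at the shared bezel and angled `tilt_deg` towards the viewer. A tilt of zero reproduces what a flat 5,760-pixel-wide render does, so comparing the two shows how far off the flat render is. The function names, distances and angles are all hypothetical, and the function assumes the ray actually lands on the right-hand panel.

```python
import math

def right_screen_offset(phi_deg, tilt_deg, dist, width):
    """Distance (metres) along the right-hand panel, measured from the bezel it
    shares with the centre panel, at which a ray phi_deg to the right of straight
    ahead meets that panel when it is angled tilt_deg towards the viewer.

    Top-down model: viewer at the origin looking along +y; centre panel on the
    line y = dist, spanning x in [-width/2, width/2]. tilt_deg = 0 is the flat
    layout that a single 5,760 x 1,080 projection assumes."""
    phi, tilt = math.radians(phi_deg), math.radians(tilt_deg)
    return ((dist * math.tan(phi) - width / 2)
            / (math.cos(tilt) + math.sin(tilt) * math.tan(phi)))

def to_pixels(offset_m, width, h_res=1920):
    """Convert a distance along a panel to a pixel column on a 1080p panel."""
    return offset_m / width * h_res

D, W = 0.6, 0.53  # hypothetical: 60 cm viewing distance, ~53 cm wide 24-inch panels
phi = 45.0        # hypothetical object direction, degrees right of straight ahead
flat = right_screen_offset(phi, 0.0, D, W)     # where a flat render draws it
tilted = right_screen_offset(phi, 30.0, D, W)  # where it belongs on a 30-degree panel
print(round(to_pixels(flat, W)), round(to_pixels(tilted, W)))
```

With these made-up numbers, the flat render draws the object about 1,214 pixels in from the shared bezel, while on a panel angled at 30 degrees it belongs nearer pixel 888. That gap is the stretching users see, and rendering each screen with its own projection is what removes it.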

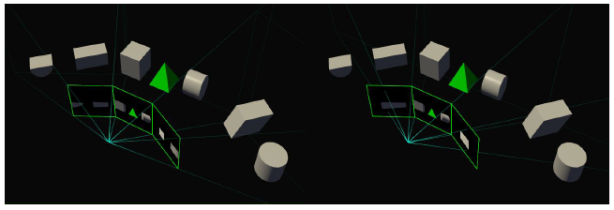

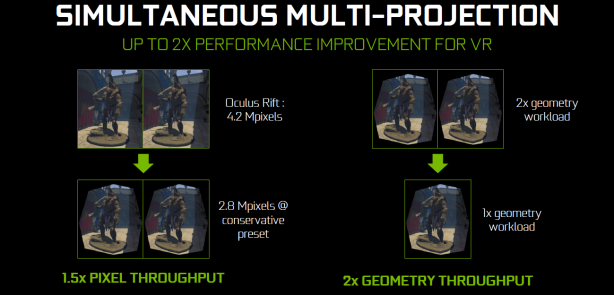

The main SMP application, however, is going to be VR. As we know, VR requires two separate projections of a scene, one per eye, which are typically rendered separately. Since SMP supports two projection centres, it allows scene submission, driver and OS scheduling, and geometry processing to run just once while outputting two positions per vertex at no additional cost. Nvidia calls this Single Pass Stereo.
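Why can one geometry pass serve both eyes? If both eyes share the same projection matrix and differ only by a shift along the x-axis (a simplifying assumption; real headsets typically use slightly different, asymmetric per-eye frustums), then moving the projection centre changes only the clip-space x coordinate. The hypothetical Python helpers below compute the vertex once, derive both eye positions from it, and check the result against projecting from each eye separately.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix, row-major, for column vectors."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def mat_vec(m, v):
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def single_pass_stereo(view_pos, proj, half_ipd):
    """Hypothetical helper: project once from the midpoint between the eyes,
    then derive both eyes' clip-space positions."""
    x, y, z, w = mat_vec(proj, list(view_pos) + [1.0])  # shared work
    # Shifting the projection centre by half_ipd along x only changes clip x,
    # by proj[0][0] * half_ipd, because column 0 of proj is zero elsewhere.
    dx = proj[0][0] * half_ipd
    left = [x + dx, y, z, w]   # left eye sits at -half_ipd
    right = [x - dx, y, z, w]  # right eye sits at +half_ipd
    return left, right

proj = perspective(90.0, 1.0, 0.1, 100.0)
vertex = (0.3, -0.2, -2.0)
h = 0.032  # hypothetical half of a 64 mm interpupillary distance, in metres
left, right = single_pass_stereo(vertex, proj, h)

# Brute-force reference: re-project the vertex relative to each eye's own position.
left_ref = mat_vec(proj, [vertex[0] + h, vertex[1], vertex[2], 1.0])
right_ref = mat_vec(proj, [vertex[0] - h, vertex[1], vertex[2], 1.0])
print(all(abs(a - b) < 1e-12 for a, b in zip(left + right, left_ref + right_ref)))
```

The check prints `True`: y, z and w are identical for both eyes, so only the x coordinate differs between the two views.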

SMP's role in VR doesn't end there. In VR, after the final image has been rendered, a post-process distortion is applied so that, when viewed through the headset's lenses (a necessity given how close your eyes are to the display), the two distortions cancel out and the view looks normal. However, this distortion pass leaves the final image with considerably fewer pixels than were rendered: essentially, a waste of resources.
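The pixel waste comes from the shape of the distortion pass. As a rough model (our assumption: a single-coefficient radial polynomial with a made-up lens constant `k`, far simpler than a real headset's profile), each display pixel at normalised radius r samples the rendered image at r(1 + kr²). The derivative of that mapping shows how many rendered pixels are squeezed into each display pixel at a given radius.

```python
def source_radius(r_display, k):
    """Radius in the rendered image that the distortion pass samples for a display
    pixel at radius r_display (both normalised so the display edge is 1).
    Hypothetical single-coefficient barrel model; k is a made-up lens constant."""
    return r_display * (1.0 + k * r_display ** 2)

def rendered_px_per_display_px(r_display, k):
    """Radial derivative d(source_radius)/d(r_display): roughly how many rendered
    pixels are squeezed into one display pixel (along the radius) at that point."""
    return 1.0 + 3.0 * k * r_display ** 2

k = 0.22
for r in (0.0, 0.5, 1.0):
    print(f"r={r:.1f}  samples r={source_radius(r, k):.3f}  "
          f"squeeze={rendered_px_per_display_px(r, k):.2f}x")
```

At the centre the ratio is 1, but at the edge of the display it climbs to about 1.66 with this k, so much of the shaded periphery never reaches the eye as distinct pixels.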

Now, as we learnt, the SMP engine can generate up to 16 perspectives for each of the two viewpoints. Nvidia has used this to develop Lens Matched Shading (LMS), which divides the display region into four sections, each with its own perspective. Nvidia can tweak the parameters of these perspectives to suit the distortion properties of the lens being used, so that the rendered image more closely matches what the final, post-processed one will look like.
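One common way to express a per-quadrant perspective, and our assumption here, is to make the clip-space w coordinate grow linearly towards the outer edges, which is equivalent to tilting that quadrant's projection plane. The single coefficient `a` and the helper names below are hypothetical simplifications, not Nvidia's tuned Oculus Rift parameters. The sketch maps a few points and estimates the resulting saving.

```python
def lms_ndc(x, y, a):
    """Hypothetical single-coefficient 'modified w' mapping for one eye.
    Input is a clip-space point with w = 1; output is its screen position.
    Using |x| and |y| is equivalent to giving each of the four quadrants its own
    coefficient signs, so w grows towards every outer edge."""
    w = 1.0 + a * abs(x) + a * abs(y)
    return x / w, y / w

def edge_density(a):
    """Pixel density at the midpoint of an edge relative to the centre:
    the derivative of x / (1 + a*x) at x = 1 (it is exactly 1 at x = 0)."""
    return 1.0 / (1.0 + a) ** 2

a = 0.25  # hypothetical coefficient; a real profile would be tuned per lens
for x, y in [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]:
    print((x, y), "->", lms_ndc(x, y, a))

# Content now spans +/-1/(1+a) per axis, so the render target can shrink by that
# factor on each axis while keeping the centre's pixel density unchanged.
scale = 1.0 / (1.0 + a)
print(f"pixel count vs. original: {scale ** 2:.2f}, edge density: {edge_density(a):.2f}")
```

The centre point is untouched while the edge midpoint moves in to 0.8, so each axis of the render target can shrink to 80 percent (0.64 of the pixels) with full density kept at the centre; density at the edge midpoints drops to 0.64 of the centre's.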

The examples Nvidia supplies are based on Oculus Rift parameters. Without LMS, you'd be rendering a 2.1MP image for a final image just 1.1MP in size. With LMS applied, the rendered image is reduced to 1.4MP, giving Pascal GPUs up to 50 percent more pixel shading throughput in VR. This is in addition to the up to 2x geometry throughput gain from Single Pass Stereo.
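The percentages follow directly from Nvidia's figures. Assuming pixel shading cost scales linearly with the number of pixels shaded (a simplification), the arithmetic is:

```python
# Figures from Nvidia's Oculus Rift example (megapixels per frame).
rendered_without_lms = 2.1
rendered_with_lms = 1.4
final_after_distortion = 1.1

# Assumption: pixel shading cost scales linearly with the number of pixels shaded.
overshade_without = rendered_without_lms / final_after_distortion  # ~1.91x the pixels that survive
overshade_with = rendered_with_lms / final_after_distortion        # ~1.27x
throughput_gain = rendered_without_lms / rendered_with_lms         # 1.5x, i.e. "up to 50 percent"

print(f"{overshade_without:.2f}x -> {overshade_with:.2f}x, gain {throughput_gain:.2f}x")
```

It prints `1.91x -> 1.27x, gain 1.50x`: shading 1.4MP instead of 2.1MP frees a third of the pixel work, which is the same as 50 percent more throughput for the pixels that remain.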

