Some history

How about a quick history lesson then? 3D accelerated graphics were first introduced in 1997, with the first 3Dfx Voodoo card. This accelerated just pixel processing and rasterisation. Following this, the GeForce 256 GPU was the very first card to fully accelerate a whole scene from start to finish, using hardware-based transform and lighting. The GeForce 2 card was a faster version of the 256. However, the card was still fixed function - programmers could only call on the routines built into the card, rather than write their own. The technology was concurrent with DirectX 7.

The GeForce 3, then, was the first programmable GPU, allowing game developers to run their own small programmes on the card rather than relying on its built-in routines. This breakthrough came as DirectX 8 introduced the first widely-used iteration of shaders, Shader Model 1.1.

The GeForce 3 was concurrent with the Radeon 8500. The 8500 was slightly later to market, and supported Shader Model 1.4, which was introduced with the revision of DirectX to version 8.1.

NVIDIA then introduced the GeForce FX, and ATI the Radeon 9700. Both these cards supported DirectX 9.0 and Shader Model 2.0, with NVIDIA also supporting the 2.0a revision for a few extra features (although it was dog-slow).

So we come to today, where we have the GeForce 6 and 7 series, which support DirectX 9.0c and Shader Model 3.0. The current Radeon line is the X800, which also supports DirectX 9.0c but only Shader Model 2.0b.

Got that? Good.

So what the heck do these things do?

What are shaders?

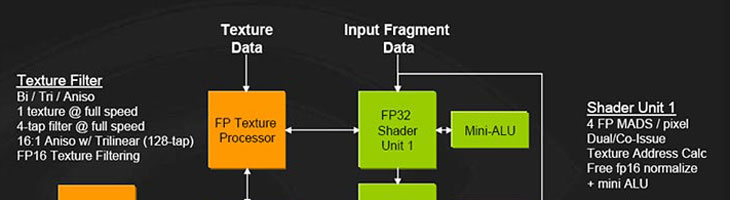

Shaders are small programmes that alter pixels or vertices. Consequently, there are two major types of shader - pixel shaders and vertex shaders. These programmes add effects to basic geometry - so a water-styled shader can be added to a flat blue texture to make it look reflective, or a glass-styled shader can be added to a polygon to make it appear transparent. These shaders are the key to what makes the graphics in games today look so much better than the graphics in games from three, four or five years ago. They are programmable, so games developers can write their own, allowing them to create a unique look and feel for their game. Companies like NVIDIA and ATI have standard libraries of shaders optimised to run on their cards, and programmers also use these where they don't need a one-of-a-kind effect.
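To make the pixel/vertex split a little more concrete, here is a minimal sketch in Python. It is purely illustrative: real shaders are written in a shading language and run on the graphics card, and every name below (`vertex_shader`, `pixel_shader`, `run_vertex_stage`, `run_pixel_stage`) is a made-up stand-in rather than part of any real API. The point is simply that a shader is a small function that the hardware runs once for every vertex or every pixel.

```python
def vertex_shader(position, offset):
    """Hypothetical vertex shader: moves one vertex by a fixed offset."""
    x, y, z = position
    dx, dy, dz = offset
    return (x + dx, y + dy, z + dz)


def pixel_shader(base_colour, tint):
    """Hypothetical pixel shader: multiplies one pixel's RGB colour by a tint."""
    return tuple(channel * t for channel, t in zip(base_colour, tint))


def run_vertex_stage(vertices, offset):
    """Stand-in for the card: run the vertex shader once per vertex."""
    return [vertex_shader(v, offset) for v in vertices]


def run_pixel_stage(pixels, tint):
    """Stand-in for the card: run the pixel shader once per pixel."""
    return [[pixel_shader(p, tint) for p in row] for row in pixels]


if __name__ == "__main__":
    triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    print(run_vertex_stage(triangle, (0.0, 0.0, 2.0)))

    white_image = [[(1.0, 1.0, 1.0)] * 2 for _ in range(2)]
    print(run_pixel_stage(white_image, (0.5, 0.5, 1.0)))
```

Swapping in a different `pixel_shader` or `vertex_shader` changes the look of the whole scene without touching the geometry itself, which is the appeal of programmable hardware over fixed function.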

So when are the different shader models used, and what difference do they make?

The main differences between them can be summed up fairly easily. Each revision has allowed for the length of a shader - the number of separate instructions that can be executed - to be increased, allowing for increasingly complex and sophisticated effects to be created. The ways in which the programmes can be written have also improved - the 1.1 specification provides just for straight-line code, whilst the 2.x revisions allow for some looping to take place to repeat lines of code. The 3.0 model allows for dynamic branching, along the lines of if/then statements in code. Each revision has also brought neater ways of cutting the length of code, allowing for speed improvements. Possibly the biggest single change between the 2.x and 3.0 specifications is the addition of vertex texture lookups in 3.0, which let a vertex shader read from a texture and so allow displacement mapping to take place.
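The last two ideas, dynamic branching and vertex texture lookups, can be sketched in a few lines of Python. This is an illustration under stated assumptions and not how a real Shader Model 3.0 shader is written: the function names, the nearest-texel sampling and the `threshold` parameter are all invented for the example. The vertex "shader" reads a height from a texture, decides per vertex whether to do any work (the branch), and if so pushes the vertex out along its normal (the displacement).

```python
def sample_height(height_map, u, v):
    """Nearest-texel lookup into a 2D height map; u and v are in [0, 1]."""
    rows = len(height_map)
    cols = len(height_map[0])
    row = min(int(v * rows), rows - 1)  # clamp so v == 1.0 stays in range
    col = min(int(u * cols), cols - 1)  # clamp so u == 1.0 stays in range
    return height_map[row][col]


def displace_vertex(position, normal, uv, height_map, scale, threshold=0.0):
    """Hypothetical vertex shader doing displacement mapping.

    The texture read stands in for a vertex texture lookup, and the
    `if` stands in for a dynamic branch: the decision is made per
    vertex, from data only known while the shader is running.
    """
    height = sample_height(height_map, uv[0], uv[1])
    if height <= threshold:
        return position  # flat area: skip the displacement work
    return tuple(p + n * height * scale for p, n in zip(position, normal))


if __name__ == "__main__":
    height_map = [[0.0, 0.5],
                  [1.0, 0.25]]
    up = (0.0, 1.0, 0.0)
    for uv in [(0.0, 0.0), (0.75, 0.25), (1.0, 1.0)]:
        print(uv, displace_vertex((0.0, 0.0, 0.0), up, uv, height_map, 2.0))
```

Without the texture read, the vertex stage has no way of knowing the height at each point, which is why displacement mapping in the shader depends on the 3.0 feature.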
