Foreword

by Richard Swinburne

Ryan Leng is an independent technology consultant and auditor for corporations in computer systems involving hardware, software, networking, security, IT policy formulation and training.

Originally trained in Computer Science and Economics, he has since worked in many fields relating to computer hardware integration, software design and engineering, surveillance systems, advertising, multimedia production, user-interface engineering and graphic design.

Ryan approached bit-tech with his unpublished book on DDR technology a short while ago, and when we read it we were greatly impressed with its depth and attention to detail, as well as its ability to convey complex ideas in an easy-to-understand manner.

Whether or not you are adept in memory technology, it’s still an interesting read about a fundamental part of PC architecture. We’ve cut his work up into several parts; the first, published here, covers the basics. The information published here is merely an excerpt of a much larger document.

Ryan explains, “The aim of this paper is to share my knowledge and experience with others about memory technologies, especially Double-Data Rate (DDR) RAM, which I have worked with extensively. Many topics are discussed, including generational differences, trends, DDR signal management techniques, system optimisation strategy, memory compatibility problems and purchasing considerations.

It is also my hope to help explain some memory-related technologies that may have been vaguely or incorrectly explained in some online communities and “tech” sites. Many specifics and much highly technical information have been excluded in order to keep this article more manageable and, most importantly, easier to digest for casual readers.

This paper began while I was doing research into severe DDR2 memory compatibility problems (at 800MHz and beyond) with products from Asus, Abit, Biostar, DFI, Corsair, Kingston, A-Data and more. There are rising uncertainties with DDR memory technology, which memory system designers have always known about and are finding increasingly challenging to mitigate and resolve.

Why is this important to the average user and to enthusiasts? RAM stability problems and overclocking limitations are different sides of the same coin. Knowing how to test for and resolve compatibility issues can help improve users' knowledge of overclocking their RAM.”

We’d like to thank Ryan for coming to us with his expert material, and we wish him all the best with his future work.

The Basics

The industry body that governs memory technology standards is known as the Joint Electron Device Engineering Council (JEDEC). In recent years, the name has been changed to “JEDEC Solid State Technology Association”. JEDEC is part of a larger semiconductor engineering standardisation body known as the Electronic Industries Alliance (EIA). EIA is a trade association that represents all areas of the electronics industry. All manufacturers involved in the computer you are using now are members of EIA and JEDEC. Since 1958, JEDEC has been the leading developer of standards for the solid-state industry. Over the course of 15 years, DRAM performance has increased by 4,000%. Yet the design has remained relatively simple. This has been intentional.

The most fundamental aspect of RAM technology is the requirement for constant power in order to retain data. In DRAM, the stored charge must also be periodically renewed, a process known as refreshing. Economic forces have always driven memory system design, simply because there has been so much investment in the existing infrastructure. The majority of the complexity is consciously pushed into the memory controller instead. This allows DRAMs to be manufactured with relatively good yield, thus making them fairly inexpensive. Often the main objective with a consumer product is to bring it to market as inexpensively as possible to maximise adoption, rather than to offer something that is technically superior but changes the baseline.

According to Graham Allan and Jody Defazio from MOSAID Technologies Incorporated, “The current market leader, the DDR2 SDRAM, offers security of supply, high storage capacity, low cost, and reasonable channel bandwidth but comes with an awkward interface and complicated controller issues.”

For the purpose of clarity, SD-RAM will be denoted as “SDR”, the first generation of DDR SD-RAM will be represented by “DDR1”, while “DDR” will represent the family of memory technologies relating to the Double-Data Rate standard.

Measurements

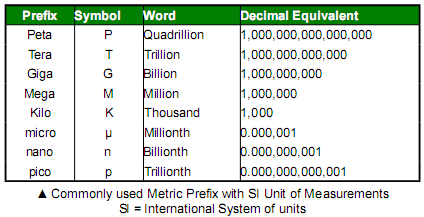

When discussing memory, we can't escape terms such as clock cycles, timing, Megabits per second (Mbps) and Megahertz (MHz). They are all related and are used to explain the concept of speed for memory systems under different situations.

Megabytes (MB) and gigabytes (GB) are usually used to describe storage capacity. Bandwidth and speed have distinct units of measurement; the difference lies in how we describe system performance, which applies not only to memory but also to other parts of the computer.

Bandwidth describes the amount of data being sent (bits), normally expressed over a given period of time (bits per second). Speed is the measurement of how fast the data flows (Hz). More units of data can be carried in a second with a faster ‘flow’. When discussing speed in memory systems, these two terms are often used interchangeably.

Advanced users would be aware of an additional unit of measurement known as the clock cycle (CK or tCK). This is used to describe, among other things, memory latencies. Latencies are the necessary delays during changes in memory operation states, where shorter delays translate to faster memory performance. In addition, a clock cycle can be converted into nanoseconds (ns). More on this later.
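The conversion between clock cycles and nanoseconds can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not something taken from the paper: the function names and the example values (a 400 MHz memory clock and a latency of 5 cycles) are assumptions chosen to keep the numbers simple.

```python
def clock_period_ns(clock_mhz: float) -> float:
    """Return the duration of one clock cycle (tCK) in nanoseconds."""
    if clock_mhz <= 0:
        raise ValueError("clock frequency must be positive")
    # 1 MHz is one million cycles per second, so one cycle lasts
    # 1000 / MHz nanoseconds.
    return 1000.0 / clock_mhz


def latency_ns(cycles: float, clock_mhz: float) -> float:
    """Convert a latency given in clock cycles into nanoseconds."""
    return cycles * clock_period_ns(clock_mhz)


if __name__ == "__main__":
    # Hypothetical example values: 400 MHz clock, latency of 5 cycles.
    print(clock_period_ns(400.0))  # 2.5
    print(latency_ns(5, 400.0))    # 12.5
```

One consequence worth noting is that a latency quoted in cycles only becomes comparable between modules once it is converted to time: under these assumed figures, 5 cycles at 400 MHz (12.5 ns) is a shorter real delay than 4 cycles at 200 MHz (20 ns).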

An Analogy - On The Road Performance

Memory pathways are similar to roads. Bandwidth (bits per second) is similar to the maximum number of cars that a section of the road can handle at a given time. It is directly related to the width of the road, or the number of lanes available. The frequency (Hz) is equivalent to the maximum speed limit imposed on those cars. A higher speed limit will allow more vehicles to travel at a faster rate. However, collisions may occur more frequently. Memory latencies can be thought of as junctions with traffic lights on these roads. They cause delays in order to avoid vehicle collisions. Shortening the wait period will increase the flow of vehicles, but only on the condition that the vehicles are ready and have enough time to move.
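In terms of the road analogy, peak bandwidth is roughly the number of lanes multiplied by how often cars can enter them. A rough sketch of that arithmetic follows. The function name is illustrative, and the 400 MHz clock and 64-bit bus width are assumed example values of the kind found on a DDR2-800 module; none of these figures come from the paper itself.

```python
def peak_bandwidth_mb_per_s(clock_mhz: float, bus_width_bits: int,
                            transfers_per_clock: int = 2) -> float:
    """Theoretical peak bandwidth in megabytes per second.

    transfers_per_clock is 1 for SDR and 2 for DDR memory, which moves
    data on both the rising and the falling edge of the clock.
    """
    # Millions of transfers per second across the whole bus.
    mega_transfers = clock_mhz * transfers_per_clock
    # Each transfer carries bus_width_bits bits; divide by 8 for bytes.
    return mega_transfers * bus_width_bits / 8


if __name__ == "__main__":
    # Assumed example: 400 MHz clock, 64-bit bus (the "lanes").
    print(peak_bandwidth_mb_per_s(400.0, 64))                         # 6400.0
    print(peak_bandwidth_mb_per_s(400.0, 64, transfers_per_clock=1))  # 3200.0
```

This is a theoretical ceiling only. The latencies described above (the traffic lights) mean that sustained throughput in practice will be lower.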
