Researchers unveil performance-boosting memory compression

April 16, 2019 | 11:09


Researchers at the Massachusetts Institute of Technology (MIT) have proposed a new data compression technique, applied at the object level, which they claim can considerably boost the performance of programs written in any object-based programming language.

While memory bandwidth increases at a regular pace, it remains a serious bottleneck for high-speed computation - the primary reason more powerful processors include larger amounts of extremely high-speed but expensive cache memory. For many programs, waiting for data to transfer from main memory to the processor and back again can take up a considerable amount of their run time. One solution to this problem is in-memory processing, which adds logic elements to the memory itself so the most common data processing tasks can occur in place; another is to reduce the amount of data that actually has to be shuffled around, and it's this latter approach that inspired a new compression technique at MIT.

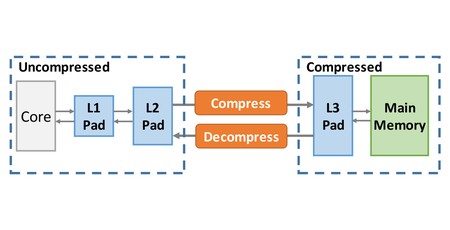

'The motivation was trying to come up with a new memory hierarchy that could do object-based compression, instead of cache-line compression,' explains lead author Po-An Tsai, a graduate student in the Computer Science and Artificial Intelligence Laboratory (CSAIL), in an interview with MIT News, 'because that’s how most modern programming languages manage data.'

'All computer systems would benefit from this,' adds co-author Daniel Sanchez, professor of computer science and electrical engineering and a researcher at CSAIL, of the pair's work. 'Programs become faster because they stop being bottlenecked by memory bandwidth.'

The researchers claim the compression system is compatible with any modern programming language which uses objects - it was demonstrated using a modified Java virtual machine - and is considerably more efficient than traditional cache-line-based compression. During testing, the pair claim to have seen a rough doubling in the amount of data which can be compressed and a corresponding halving of the amount of memory required by the program - and, thus, of the amount of data which has to be shuffled into and out of said memory.

The result, in the Java VM-based testing, is a claimed up-to-2x and average 1.63x boost in compression ratio when compared to existing state-of-the-art compressed memory hierarchy implementations, along with a 56 percent reduction in memory traffic which directly led to a 17 percent performance boost in the program itself. The tests covered both array- and object-dominated workloads, rather than concentrating solely on the latter, where the new technique would show the biggest improvement.
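To put those percentages in relative terms, the short Python sketch below converts the reported figures into fractions of the baseline. The function names are hypothetical, and it assumes that the '17 percent performance boost' means a 1.17x speed-up and that the 1.63x figure is measured against the existing compressed hierarchy; neither reading is spelled out in the figures quoted above.

```python
# Back-of-the-envelope conversions of the figures quoted in the article.
# All function names are illustrative; the interpretations are assumptions.

def remaining_fraction(reduction_percent: float) -> float:
    """Fraction of the baseline left after a percentage reduction."""
    return 1.0 - reduction_percent / 100.0

def size_relative_to_baseline(ratio_gain: float) -> float:
    """Compressed size relative to the baseline scheme, given an N-times
    better compression ratio (assumes the same uncompressed data)."""
    return 1.0 / ratio_gain

def runtime_relative_to_baseline(boost_percent: float) -> float:
    """Run time relative to baseline, ASSUMING 'boost' means speed-up."""
    return 1.0 / (1.0 + boost_percent / 100.0)

if __name__ == "__main__":
    print(f"Memory traffic remaining: {remaining_fraction(56):.2f} of baseline")
    print(f"Compressed size (average): {size_relative_to_baseline(1.63):.2f} of baseline")
    print(f"Compressed size (best case): {size_relative_to_baseline(2.0):.2f} of baseline")
    print(f"Run time: {runtime_relative_to_baseline(17):.2f} of baseline")
```

Under those assumptions, the program moves a little under half as much data as before, while its run time falls by roughly a seventh - a reminder that memory traffic is only one of several factors in overall performance.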

The pair's paper, 'Compress Objects, Not Cache Lines: An Object-Based Compressed Memory Hierarchy', is available from MIT's CSAIL (PDF warning).
