Making GPU Computing a standard

Nvidia has talked about hardware support for double precision in the past, especially when Tesla launched, but no shipping GPU supports it in hardware yet. We were under the impression that the G9x GPUs supported the technology through emulation, and David confirmed that this was the case. "There's an emulation library for development of software," he said. "It's really not meant to run the real application – it's meant for application development because our next products will support double precision."

I asked David whether by "next products" he meant the next-generation architecture that Jen-Hsun Huang referred to as Tesla 2 at the recent Financial Analyst Day. "Yes, that's right," said David. "It will support double precision as a real product that people could really build double precision [software] products out of."

For those unaware of what double precision offers, it stores each floating-point value in 64 bits rather than 32, which increases both the precision and the range of magnitudes that can be represented. "Consumers don't need double precision," he said. "It's mainly going to be used in the HPC space to improve computational speed. As a result, we're going to need to make the Tesla customers pay for that silicon on every chip until there is demand for it in the consumer space. That demand is kind of hard to imagine at the moment though.

"I can’t predict the future because I don't know, but I would imagine that double precision will be supported across all products. However, if you add something like ECC, I would imagine that it would only be supported on the professional series products [i.e. Quadro and Tesla]."

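To make the single versus double precision distinction concrete, here is a minimal C sketch of our own (it is not Nvidia code and it runs on the CPU, not a GPU). The function names are hypothetical and chosen only for illustration. It assumes the usual IEEE 754 `float` and `double` formats, and it uses `volatile` so that intermediate results are actually rounded to the stated type.

```c
#include <assert.h>
#include <float.h>

/* Precision: returns 1 if x + 1 rounds back to x in single precision. */
int float_absorbs_one(float x) {
    volatile float y = x + 1.0f; /* volatile store rounds the result to float */
    return y == x;
}

/* The same check carried out in double precision. */
int double_absorbs_one(double x) {
    volatile double y = x + 1.0;
    return y == x;
}

/* Range: returns 1 if x * x exceeds the largest finite float. */
int float_square_overflows(float x) {
    volatile float y = x * x;
    return y > FLT_MAX;
}

/* The same check against the largest finite double. */
int double_square_overflows(double x) {
    volatile double y = x * x;
    return y > DBL_MAX;
}

/* Accumulation: add `step` to a running total `n` times in single precision. */
float sum_float(int n, float step) {
    assert(n >= 0);
    volatile float total = 0.0f;
    for (int i = 0; i < n; i++) {
        total = total + step;
    }
    return total;
}

/* The same accumulation in double precision. */
double sum_double(int n, double step) {
    assert(n >= 0);
    volatile double total = 0.0;
    for (int i = 0; i < n; i++) {
        total = total + step;
    }
    return total;
}
```

Called with 16777216 (2^24), `float_absorbs_one` reports that the added 1 is lost while `double_absorbs_one` does not; squaring 1e20 overflows a `float` but not a `double`; and summing 0.1 a million times drifts visibly in `float` while staying close to 100000 in `double`. Long-running accumulations of this kind are typical of the HPC workloads David mentions.
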
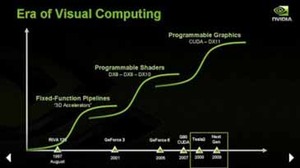

Following this, we moved the discussion towards CUDA, as it seemed like a natural progression. David talked about expanding CUDA to other hardware vendors, and the fact that this would require them to implement support for C. He said that current ATI hardware cannot run C code, so the question is: has Nvidia talked with competitors (like ATI) about running C on their hardware?

"I can't really comment on conversations we have with partners and competitors," said Kirk. "We do take every opportunity to discuss the ability to run CUDA with anyone who's interested. It's not exactly an open standard, but there's really not very much that is proprietary about it. Really, it's just C and there are these functions for distributing it."

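As a rough illustration of what "just C" plus "functions for distributing it" might look like, here is a plain C sketch of our own. It is not CUDA and not Nvidia's API: both function names are hypothetical, and the serial loop is only a stand-in for the distribution step. In real CUDA the per-element function would be a kernel, the element index would come from the thread and block IDs, and the loop would be replaced by a kernel launch across many GPU threads.

```c
#include <assert.h>
#include <stddef.h>

/* Per-element work: ordinary C, written from one element's point of view.
 * Computes y[i] = a * x[i] + y[i] for a single index i. */
void saxpy_element(size_t i, float a, const float *x, float *y) {
    y[i] = a * x[i] + y[i];
}

/* Hypothetical stand-in for the "distribution" step: a serial CPU loop
 * that applies the per-element function to every index in [0, n).
 * On a GPU, each index could instead be handled by its own thread. */
void run_over_range(size_t n, float a, const float *x, float *y) {
    assert(x != NULL && y != NULL);
    for (size_t i = 0; i < n; i++) {
        saxpy_element(i, a, x, y);
    }
}
```

The point of the split is that the per-element function carries no assumption about how many elements exist or in what order they run, which is what would let a runtime spread the work across parallel hardware.
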
Could ATI enable support for C (and CUDA) on future Radeon, FireGL and FireStream products?

So what will it take to make CUDA a standard, or at least the ability to run general computing tasks written in C on a GPU? After all, Nvidia has talked about several new 'killer' applications coming along (such as PhysX and RapiHD) – does David see these as catalysts for the shift to massively parallel computing?

"I think that the video encoding application will touch a lot of people. Other kinds of things like plugins for Photoshop and tools for other tasks that people do will accelerate the shift," said David. The discussion then moved onto video encoding and how the task is broken up into small pieces that are executed sequentially.

During one of the SSE4 briefings at IDF Fall 2007, I saw a slide with a diagram showing how SSE4 massively improved performance in video encoding applications – I remember asking myself: why isn't this being done on a GPU? I could see the potential to run this much faster, and Nvidia's Financial Analyst Day demonstration was a great proof of concept.

"Exactly, it's so much easier and faster. The same is true with photos as well, as you're essentially doing the same thing when you're manipulating images—just with a still instead of a motion picture. You should ask the CPU guys whether or not it'll be faster manipulating photos on a CPU or GPU," David joked.
