Where we're heading

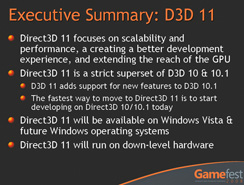

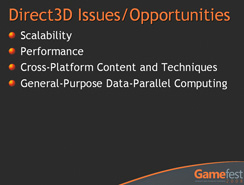

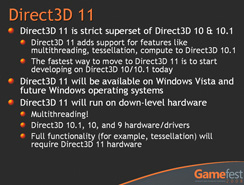

Microsoft said from the outset that DirectX 11 will be an expansion of DirectX 10.1, adding new functionality and features while also focusing on improving scalability and efficiency for both the developer and the hardware.

I'm being careful with my words here, because Microsoft promised big speedups with DirectX 10 when really what it did was enable the hardware to be more efficient and basically do more stuff at once. Performance, in terms of raw frames per second, didn't improve, but DX10 made things possible that weren't before because of the cost and inefficiency of completing those operations in DX9.

While I'm not expecting a raw frame rate jump with DirectX 11, the features being added and the conversations I've had with a few developers on the DirectX advisory board suggest that developers should be able to do more with the new API because it will be more scalable and efficient than what's currently available. In that sense, it's like where DirectX 10 ended up – doing more without suffering too many consequences for doing so.

The new API is expected to ship with Windows 7 (which is expected in late 2009/early 2010), but the good news is that DirectX 11 will also run on Windows Vista. On that note, we're hoping that DirectX 11 won't be given the same treatment as DX10 because backwards compatibility is there and, as you'll see over the coming pages, there are some features that could even prove beneficial to current DirectX 9.0 and 10/10.1 hardware.

DirectX 11 pipeline

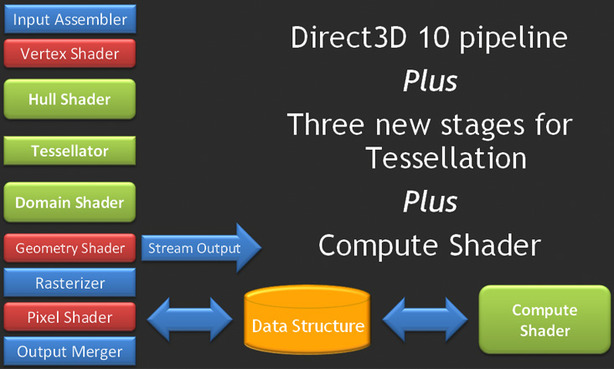

The emphasis for the new API has been placed on content authoring, enabling developers to create more complex and realistic characters. This fits right in with Microsoft's push to improve scalability and efficiency because the current trend has been for developers to build characters with increasingly complex meshes of triangles, and then reduce the number of triangles depending on the horsepower available in a particular system.

To resolve this problem in particular, Microsoft is introducing three new stages in the DirectX 11 graphics pipeline: the hull shader, tessellator and domain shader – the hull and domain shaders basically facilitate the inclusion of the tessellator. If you take away these new stages, you end up with something that's very similar to the existing DirectX 10 pipeline – there are some slight tweaks that make them technically different though.
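To make the idea a little more concrete, here is a minimal CPU-side sketch in Python of how the three new stages could divide the work. It is not DirectX code and not how any real hardware partitions a patch: the function names, the `budget` parameter and the uniform subdivision scheme are all assumptions made for illustration. The point is only that the artist supplies one coarse patch, and the amount of geometry generated from it scales with what the system can afford.

```python
def hull_stage(budget):
    """Pick a tessellation factor (hypothetical heuristic).

    `budget` is an assumed cap on triangles per patch; a factor of n
    yields n * n triangles in the uniform scheme used below.
    """
    factor = 1
    while (factor + 1) ** 2 <= budget:
        factor += 1
    return factor


def tessellator_stage(factor):
    """Uniformly subdivide a triangle: return barycentric points and
    index triples. Real tessellators use their own patterns."""
    points, index = [], {}
    for i in range(factor + 1):
        for j in range(factor + 1 - i):
            index[(i, j)] = len(points)
            points.append((i / factor, j / factor, (factor - i - j) / factor))
    triangles = []
    for i in range(factor):
        for j in range(factor - i):
            # "upward" triangle in this grid cell
            triangles.append((index[(i, j)], index[(i + 1, j)], index[(i, j + 1)]))
            # "downward" triangle, if the cell is not on the far edge
            if i + j < factor - 1:
                triangles.append(
                    (index[(i + 1, j)], index[(i + 1, j + 1)], index[(i, j + 1)])
                )
    return points, triangles


def domain_stage(patch, bary):
    """Turn one barycentric point into a position on the flat patch."""
    u, v, w = bary
    a, b, c = patch
    return tuple(u * a[k] + v * b[k] + w * c[k] for k in range(3))


def tessellate(patch, budget):
    """Run the three sketch stages in pipeline order."""
    factor = hull_stage(budget)
    points, triangles = tessellator_stage(factor)
    vertices = [domain_stage(patch, p) for p in points]
    return vertices, triangles


coarse_patch = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
low_vertices, low_triangles = tessellate(coarse_patch, budget=1)
high_vertices, high_triangles = tessellate(coarse_patch, budget=16)
print(len(low_triangles), len(high_triangles))  # prints "1 16"
```

The same `coarse_patch` produces one triangle on the low budget and sixteen on the high one, which is the scalability argument in miniature. A real domain shader would typically also displace the generated vertices to add surface detail; this sketch only interpolates across a flat triangle.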

In addition to the three new stages, there have been some changes made to the pixel shader stage in the pipeline, which enable the compute shader for more general purpose computing tasks. One thing was evident from our discussions with various game and general-purpose application developers – there is an almost unlimited number of ways in which the compute shader can be used. As long as there is a way to massively thread an application, there is a use for the compute shader.
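What "massively threaded" means in practice is that the work can be written as one small function that runs independently for every element of a data set. The sketch below emulates that pattern serially in Python; it is not the DirectX 11 compute shader API, and `dispatch`, `integrate` and the group size are hypothetical names and values chosen for illustration. On a GPU, the invocations inside the two loops would run in parallel rather than one after another.

```python
def dispatch(kernel, item_count, group_size, *args):
    """Call `kernel` once per element, organised into thread groups.

    This is a serial emulation: a GPU would run the invocations
    concurrently, which is safe because each touches only its own index.
    """
    group_count = (item_count + group_size - 1) // group_size  # round up
    for group in range(group_count):
        for local in range(group_size):
            thread_id = group * group_size + local
            if thread_id < item_count:  # the last group may be partly empty
                kernel(thread_id, *args)


def integrate(thread_id, positions, velocities, dt):
    """Per-element kernel: advance one particle by one time step."""
    positions[thread_id] += velocities[thread_id] * dt


positions = [0.0, 1.0, 2.0, 3.0, 4.0]
velocities = [1.0, 1.0, 1.0, 1.0, 1.0]
dispatch(integrate, len(positions), 2, positions, velocities, 0.5)
print(positions)  # prints "[0.5, 1.5, 2.5, 3.5, 4.5]"
```

Nothing in `integrate` depends on the result for any other particle, and that independence is what makes a task a candidate for this kind of hardware, whether it is physics, image processing or something else entirely.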


Think of the compute shader a bit like OpenCL and you won't be far off – it will enable DirectX to become an industry standard for massively parallel general purpose computing tasks, while also giving game developers the freedom to access the massive amounts of compute horsepower available for other, non-graphics tasks. Currently, both AMD and Nvidia have their own respective GPU or stream computing initiatives that are available to developers to solve non-graphics problems but, importantly, they're not compatible with one another. Intel is already talking about its own answer to the programming problems presented by massively parallel computing with Larrabee as well, and it's unlikely to be compatible with either AMD's Stream SDK or Nvidia's CUDA platform.

This creates problems for application developers, because they have to decide whether they're going to focus on cross-platform compatibility – and potentially need to write three 'different' applications for the different hardware – or whether they're going to shoot for maximum performance on one particular platform. We've already talked about some of the potential problems presented by game developers using one of the two GPU-accelerated physics APIs in their games – you end up with one gamer getting a better experience than the other, depending on who they bought their graphics card from.

What's more, the developer is limited in what they can do with GPU-accelerated physics, because they've got to cater for the lowest common denominator as well. In other words, really compelling gameplay physics can't happen for the most part because even a quad-core CPU will grind to a halt.
